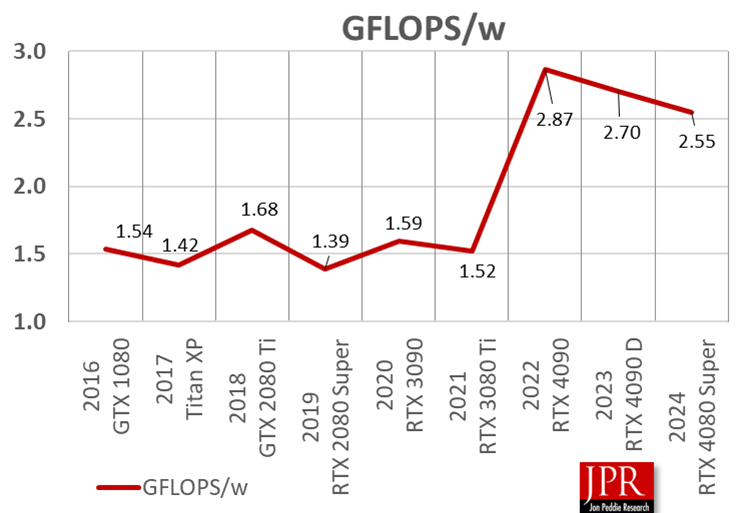

The alarm over GPU power consumption is misleading. Power consumption has increased, but performance has increased far more. Measured on consumer-grade GPUs, performance per watt doubled in the six years from 2016, then slipped to 178% of the 2016 level over the last two years. Today’s GPUs deliver significantly more performance per watt thanks to advances such as smaller transistors and higher clock rates. Data center power metrics are more complex, but the GFLOPS/TDP comparison remains a good measure of GPU efficiency over time.

Maybe it is clickbait, or a striving for sensational headlines, but the alarm and astonishment over the power consumption of GPUs are totally misleading. Not that GPU power consumption hasn’t increased; of course it has. More importantly, processing power has increased at a much greater rate than power consumption. So why isn’t that the headline?

Taking consumer-grade GPUs from 2016 as the baseline, the performance (GFLOPS) to power (W) ratio doubled in six years, then drifted down to 178% of that baseline in the last two years.

If performance (measured in GFLOPS) is the bang, and power consumption (measured as TDP, in watts) is the buck, then today’s GPUs definitely give a lot more bang for the buck than those of eight years ago. That’s due to several factors: smaller transistors, changes in leakage from one node to another, higher clock rates, and more shaders.

However, from a data center management point of view, power consumption is measured not at the GPU alone (TDP) but at the system level, and even that can be measured in two ways: by the rack and by the facility, which includes cooling.

Yet, you can’t really get a fair comparison because today’s data centers have significantly more GPUs than those of a few years ago.

So although not perfect, the GFLOPS/TDP comparison is a good metric for evaluating the overall power-performance ratio of GPUs over time, whether used in a gaming PC or a data center.
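For readers who want to run the comparison themselves, here is a minimal sketch of the GFLOPS/TDP calculation. The `Gpu` class, the function names, and both sets of specs are hypothetical placeholders chosen to make the arithmetic easy to follow; they are not measurements of any real card, so swap in published GFLOPS and TDP figures for the GPUs you care about.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    """Hypothetical container for the two numbers the metric needs."""
    name: str
    gflops: float      # peak throughput, in GFLOPS
    tdp_watts: float   # thermal design power, in watts


def gflops_per_watt(gpu: Gpu) -> float:
    """Performance per watt: the bang (GFLOPS) divided by the buck (TDP)."""
    if gpu.tdp_watts <= 0:
        raise ValueError("TDP must be positive")
    return gpu.gflops / gpu.tdp_watts


def efficiency_vs_baseline(gpu: Gpu, baseline: Gpu) -> float:
    """Efficiency of `gpu` as a percentage of the baseline's efficiency."""
    return 100.0 * gflops_per_watt(gpu) / gflops_per_watt(baseline)


# Placeholder specs for illustration only, not real products.
baseline = Gpu("hypothetical-older-gpu", gflops=1000.0, tdp_watts=100.0)
newer = Gpu("hypothetical-newer-gpu", gflops=4000.0, tdp_watts=200.0)

print(f"{baseline.name}: {gflops_per_watt(baseline):.1f} GFLOPS/W")
print(f"{newer.name}: {gflops_per_watt(newer):.1f} GFLOPS/W")
print(f"relative efficiency: {efficiency_vs_baseline(newer, baseline):.0f}%")
```

In this made-up case the newer card draws twice the power but delivers four times the throughput, so its efficiency comes out at 200% of the baseline. That is the pattern the headline numbers miss: TDP can rise while performance per watt still improves.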