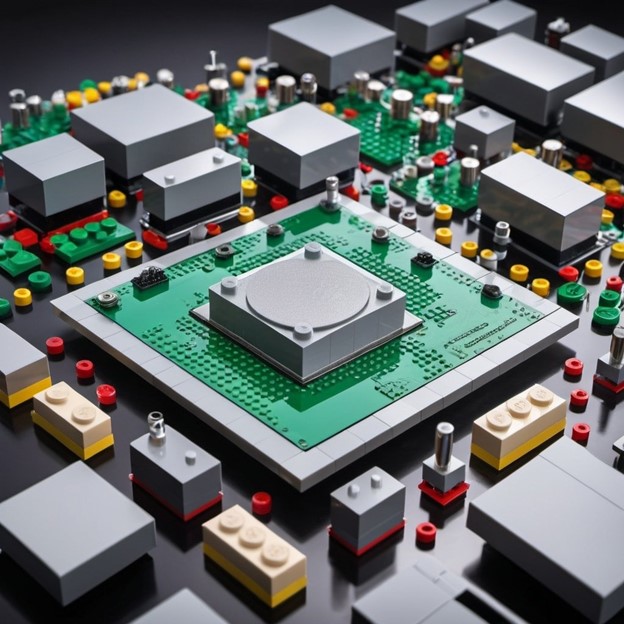

The divide between dedicated application-specific processors (ASPs) and programmable processors for AI processing is widening. While ASPs can outperform programmable processors in specific tasks, market dynamics and economics come into play. Established players like AMD, Intel, and Nvidia are exploring both options, with Nvidia’s GPU and integrated NPU currently dominating the market. The industry is trending toward programmable processors, like complex GPUs with NPUs, assimilating specialized tasks and making dedicated ASPs less necessary. The rise of chiplets will further enable OEMs to customize processors, akin to building with Lego blocks, blurring the lines between ASPs and programmable processors.

As has been discussed here many times before, a dedicated application-specific processor (ASP) will always be able to outperform a programmable processor on a given task. The economics vary depending on the size of the market being pursued and the number of competitors.

AMD and Intel have covered both sides of this divergence, and Nvidia is rumored to be considering offering a dedicated AI ASP. SoC builders Apple and Qualcomm already have one.

Nvidia has an NPU it could pull out of its GPU (which has become a big SoC) and offer an AI ASP like AMD’s XDNA or Intel’s Gaudi.

And then there are the companies like Cerebras, Graphcore, and Tenstorrent, to name a few (there are almost a dozen now).

The decision tree for a prospective AI processor buyer is a line that splits in two, like an upside-down Y, with one branch going to the GPU and the other to an ASP. Right now, AMD and Intel don’t care which path a prospect chooses. Nvidia, however, outsells both of them combined several times over with just a GPU and an integrated NPU. Why make the customer choose?

History has shown us that the GPU solution usually wins. DSPs and other ASICs were assimilated, and their stand-alone markets shrank or disappeared. The GPU and the vaunted x86 are huge collections of ASPs, and the darling RISC-V is being extended every day, losing the R in its name as vector and GPU capabilities and soft DSP functions are added. Arm learned that years ago. Arm is late to the AI party, and SoftBank is trying to correct that by throwing money at it.

As chiplets take on more of the market, OEMs will be able to literally order a complex multi-function processor like they’re selecting Lego parts. “I’ll have a 32-core vector SIMD, three DSPs, two video codecs, a display processor, and, oh, yeah, a 512 NPU, please—when will it be ready?”

But we’re not there yet, and so between now and chiplet utopia, we will see a lot of confusing, sometimes false claims as semiconductor companies try to win the hearts of OEMs and their customers. And behind all this are Intel, Samsung, and TSMC saying, “What!? You want how many by when? Are you nuts?”

Letting the AI genie out of the lamp has totally turned the industry upside down, and everyone is hoping it doesn’t turn out to be the Pandora’s box that got opened. But it sure is going to be exciting as hell for the next few years.