AMD introduced new AI infrastructure products, including the Instinct MI325X accelerators, Pensando Pollara 400 NIC, and Salina DPU, designed for advanced AI workloads like model training and inferencing. The MI325X, based on CDNA 3 architecture, features 256GB of HBM3E memory and improved compute performance. Production begins in Q4 2024, with broader availability by 2025. AMD also previewed the MI350 series, expected in 2025, alongside expanded AI networking and software capabilities for optimized data center performance.

AMD has introduced its latest accelerator and networking solutions designed to support the next generation of AI infrastructure. These include the AMD Instinct MI325X accelerators, the AMD Pensando Pollara 400 NIC, and the AMD Pensando Salina DPU. These products are targeted at the growing demand in data centers for advanced AI infrastructure that can handle foundation model training, fine-tuning, and inferencing.

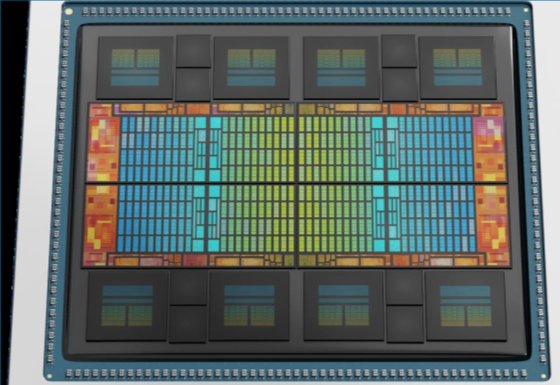

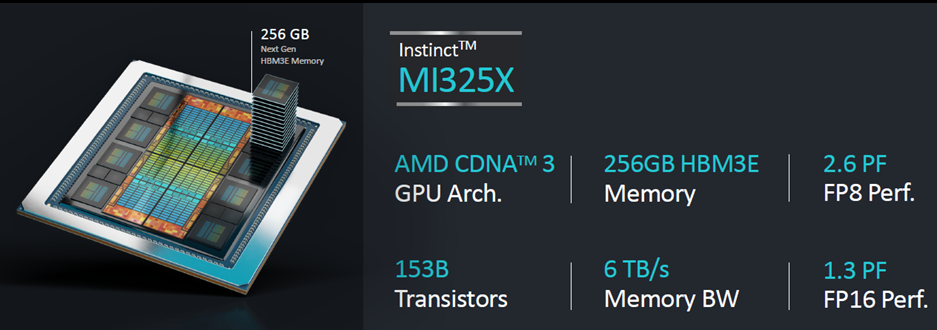

AMD said the Instinct MI325X accelerators, built on the CDNA 3 architecture, are engineered for high performance and efficiency, making them well-suited for complex AI operations. AMD’s goal with the MI325X accelerator is to optimize AI solutions that work effectively across system, rack, and data center environments.

Forrest Norrod, executive vice president and general manager of AMD’s Data Center Solutions Business Group, emphasized that the company’s road map continues to focus on delivering the performance needed to bring large-scale AI infrastructure to market more quickly. Norrod highlighted the role of AMD’s new accelerators, EPYC processors, and Pensando networking engines in creating efficient AI solutions. He added that AMD’s expanded software ecosystem and its integration of AI infrastructure help the company support AI solutions across various industries.

The AMD Instinct MI325X features 256GB of HBM3E memory, which supports 6 TB/sec bandwidth. According to AMD, that is 1.8× the memory capacity and 1.3× the bandwidth of Nvidia’s H200. AMD also claims 1.3× greater peak theoretical FP16 and FP8 compute performance than the H200, which enhances the device’s ability to handle demanding AI workloads. The MI325X is expected to be available in production shipments by Q4 2024, with widespread system availability from platform providers such as Dell Technologies, Lenovo, Hewlett Packard Enterprise, and others by early 2025.
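The capacity and bandwidth multiples can be sanity-checked with simple division. The sketch below is illustrative only: the MI325X figures come from the article, while the H200 figures (141GB and 4.8 TB/sec) are an assumption taken from Nvidia’s published specifications rather than from AMD’s announcement.

```python
# Headline memory specs. The MI325X values are quoted in the article;
# the H200 values are an assumption based on Nvidia's public spec sheet.
MI325X = {"memory_gb": 256, "bandwidth_tb_per_s": 6.0}
H200 = {"memory_gb": 141, "bandwidth_tb_per_s": 4.8}


def ratio(a: dict, b: dict, key: str) -> float:
    """Return how many times larger a[key] is than b[key]."""
    return a[key] / b[key]


capacity_ratio = ratio(MI325X, H200, "memory_gb")
bandwidth_ratio = ratio(MI325X, H200, "bandwidth_tb_per_s")

print(f"capacity: {capacity_ratio:.2f}x, bandwidth: {bandwidth_ratio:.2f}x")
```

Under those assumed H200 figures, the capacity ratio comes out at roughly 1.8× and the bandwidth ratio at 1.25×, which is consistent with the rounded 1.8× and 1.3× multiples AMD quotes.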

Looking ahead

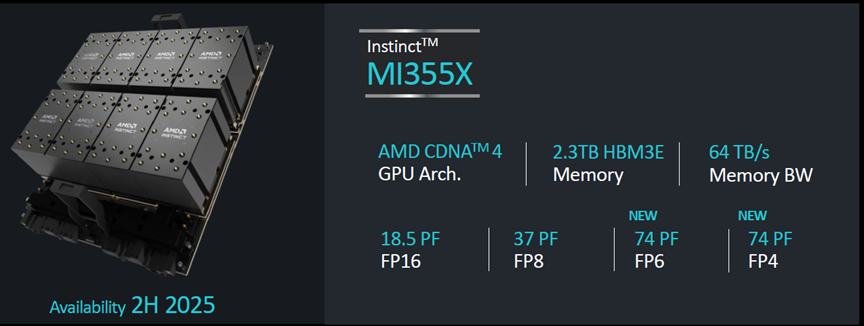

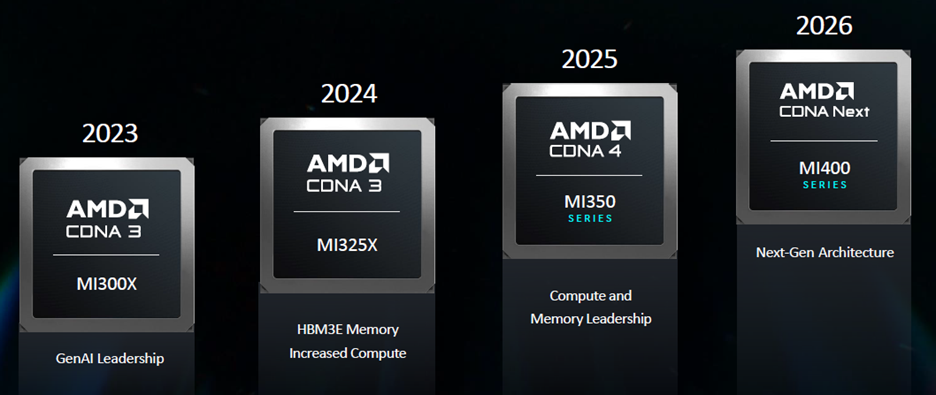

AMD also previewed its next-generation AMD Instinct MI350 series accelerators, which are expected to offer a 35-fold improvement in inference performance over the MI325X model. These CDNA 4 GPUs will be equipped with 288GB of HBM3E memory, will support the new FP4 and FP6 formats, are built on a 3nm process, and are expected to become available in the second half of 2025.

AMD gave a peek at its product naming plans for the Instinct AI GPUs going out to 2026. No detailed specifications were revealed, nor would they be expected this far ahead, but the company wanted to emphasize that it is committed to a long-term, backward-compatible AI accelerator product line. The company claims that although it didn’t introduce an AI-specific GPU until 2017, its AI data center business has grown to $2.28 billion, 50% of the company’s revenue in Q2’24.

In addition to its new accelerators, AMD is introducing networking solutions that focus on optimizing AI infrastructure. These include the AMD Pensando Salina DPU and the AMD Pensando Pollara 400 NIC. The Salina DPU is designed to manage front-end AI networking, which involves delivering data and information to AI clusters. It improves performance, bandwidth, and scalability by offering up to twice the performance of its predecessor and supports 400G throughput, optimizing performance for data-driven AI applications.

The Pollara 400, meanwhile, focuses on back-end AI networking, where it manages data transfer between accelerators and clusters. As the first AI NIC ready for the Ultra Ethernet Consortium (UEC) standard, the Pollara 400 is equipped with AMD’s P4 programmable engine and supports next-generation RDMA software. It is expected to play a key role in improving performance and scalability in accelerator-to-accelerator communication within AI infrastructure.

Both the Salina DPU and Pollara 400 NIC are currently being sampled by customers and are expected to be available in the first half of 2025.

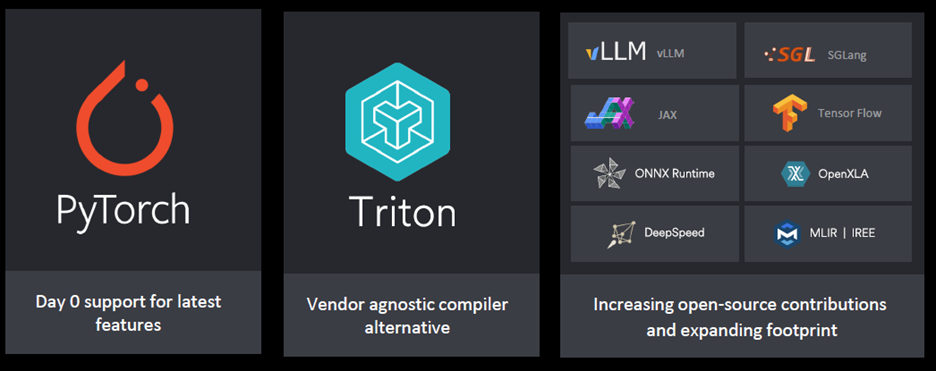

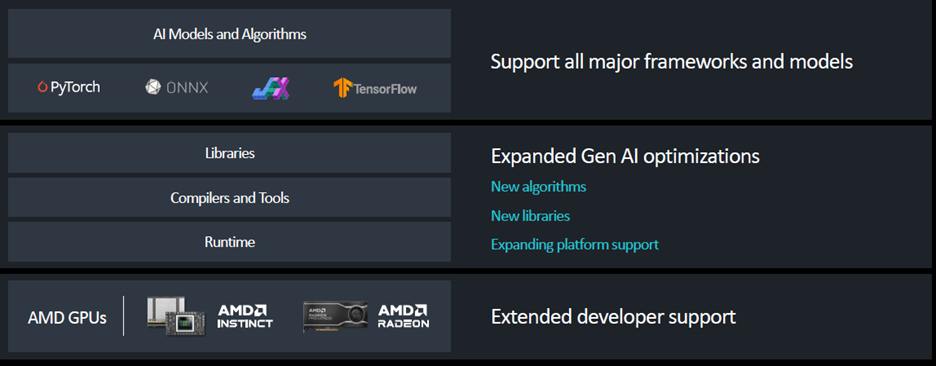

AMD is also expanding its AI software capabilities, with a focus on advancing the ROCm open software stack. This stack supports popular AI frameworks, libraries, and models like PyTorch, Triton, and Hugging Face.

The latest update, ROCm 6.2, includes new features such as support for FP8 datatype, FlashAttention-3, and Kernel Fusion, which enhance both training and inference performance for generative AI workloads. Compared to ROCm 6.0, ROCm 6.2 delivers up to a 2.4-fold improvement in inference performance and a 1.8-fold improvement in training for a variety of large language models.
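To put the fold improvements in concrete terms, the short sketch below converts each claimed speedup into the share of the original run time that would remain. It assumes the quoted figures describe throughput on a fixed workload and treats the "up to" numbers as best cases; the function and variable names are hypothetical and chosen only for illustration.

```python
def time_fraction(speedup: float) -> float:
    """Fraction of the original run time left after an N-fold throughput gain."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1.0 / speedup


# "Up to" figures quoted for ROCm 6.2 relative to ROCm 6.0.
claims = {"inference": 2.4, "training": 1.8}

for name, speedup in claims.items():
    remaining = time_fraction(speedup)
    print(f"{name}: {speedup}x -> {remaining:.1%} of the original time "
          f"({1 - remaining:.1%} less)")
```

In the best case, then, a 2.4-fold gain means an inference job finishes in a little under 42% of the time it took before, and a 1.8-fold gain brings a training run down to about 56% of its previous duration.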

These advances in hardware and software demonstrate AMD’s continued commitment to providing tools that improve the efficiency and scalability of AI infrastructure. By introducing new accelerators, DPUs, NICs, and software enhancements, AMD aims to meet the growing demand for high-performance AI systems across multiple industries. The company is positioning itself as a key player in the AI infrastructure market, helping customers deploy cutting-edge AI solutions that can handle the most demanding tasks.