Apple’s Machine Learning Research team developed an AI model called Depth Pro, which generates 3D depth maps from single 2D images, enabling rapid and detailed monocular depth estimation. Described in a paper posted to arXiv, the preprint server hosted by Cornell University, Depth Pro synthesizes high-resolution, sharp depth maps with metric precision without needing camera metadata. It features a multi-scale vision transformer, processes images in a fraction of a second, and works zero-shot for broader adaptability. The metric output allows accurate real-world measurements, which is useful for applications like augmented reality. The model’s code and weights are available on GitHub, and a demo is hosted on Hugging Face.

Apple’s Machine Learning Research team has created an AI model, Depth Pro, that generates 3D depth maps from single 2D images, enabling fast and detailed metric monocular depth estimation.

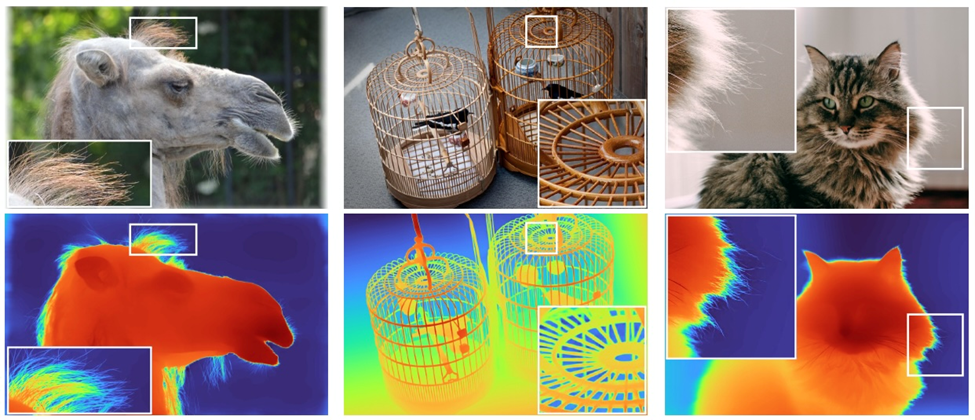

In a paper posted to arXiv, which is hosted by Cornell University, the Apple researchers present a foundation model for zero-shot metric monocular depth estimation. Their model, Depth Pro, synthesizes high-resolution depth maps with remarkable sharpness and high-frequency details. The predictions are metric, with absolute scale, without relying on the availability of metadata such as camera intrinsics. The model is fast, producing a 2.25-megapixel depth map in 0.3 seconds on a standard GPU. Those characteristics are enabled by several technical contributions, including an efficient multi-scale vision transformer for dense prediction, a training protocol that combines real and synthetic datasets to achieve high metric accuracy alongside fine boundary tracing, dedicated evaluation metrics for boundary accuracy in estimated depth maps, and state-of-the-art focal length estimation from a single image. The researchers said their experiments analyzed specific design choices and demonstrated that Depth Pro outperforms prior work along multiple dimensions. They released the code and weights on GitHub.
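As a quick sanity check on the speed claim, the arithmetic can be sketched in a few lines of Python. This assumes the 2.25-megapixel figure corresponds to the 1536-by-1536 resolution quoted in the demo description further down, with "mega" read as 2^20; both are our assumptions, not statements from the researchers.

```python
# Assumption: the 2.25-megapixel map is 1536 x 1536 pixels,
# and "mega" here means 2**20 (1,048,576) rather than 10**6.
WIDTH = 1536
HEIGHT = 1536
SECONDS_PER_MAP = 0.3  # figure quoted in the article

pixels = WIDTH * HEIGHT
megapixels_binary = pixels / 2**20
pixels_per_second = pixels / SECONDS_PER_MAP

print(f"pixels per depth map: {pixels}")
print(f"megapixels (2**20):   {megapixels_binary}")
print(f"pixels per second:    {pixels_per_second:.0f}")
```

Under those assumptions the numbers line up exactly at 2.25 megapixels, which works out to a little under 8 million depth values per second.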

Depth Pro was introduced in “Depth Pro: Sharp Monocular Metric Depth in Less Than a Second,” by Aleksei Bochkovskii, Amaël Delaunoy, Hugo Germain, Marcel Santos, Yichao Zhou, Stephan R. Richter, and Vladlen Koltun.

The AI model’s architecture features a multi-scale vision transformer, which processes an image’s overall context and fine details, such as hair, fur, and other intricate structures. This enables the model to estimate relative and absolute depth, providing real-world measurements.

For instance, this capability allows augmented reality apps to position virtual objects in physical spaces accurately.
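To see why metric depth plus a focal length is enough for real-world measurements, here is a minimal sketch using the standard pinhole camera model. It is not Depth Pro's own code: the function names, the image size, the focal length, and the depth values are all hypothetical, and the principal point is assumed to sit at the image center.

```python
import math

def backproject(u, v, depth_m, f_px, width, height):
    """Map pixel (u, v) with metric depth to a camera-space point in meters.

    Assumption: pinhole camera with the principal point at the image center.
    """
    cx, cy = width / 2.0, height / 2.0
    x = (u - cx) * depth_m / f_px
    y = (v - cy) * depth_m / f_px
    return (x, y, depth_m)

def distance_m(p, q):
    """Euclidean distance between two 3D points, in meters."""
    return math.dist(p, q)

# Hypothetical values: a 1536 x 1536 image, a focal length of 1000 pixels,
# and two pixels on the same row that are both 2 meters from the camera.
left = backproject(568, 768, 2.0, 1000.0, 1536, 1536)
right = backproject(968, 768, 2.0, 1000.0, 1536, 1536)
width_of_object = distance_m(left, right)
print(f"estimated width: {width_of_object:.2f} m")
```

With a depth map that is only relative, the same two pixels could be any distance apart; the absolute scale and the estimated focal length are what turn pixel offsets into meters.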

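The AI achieves this without requiring extensive training on specialized datasets for each use case, instead working zero-shot. Zero-shot learning is usually described as a machine learning approach that lets a model recognize and categorize unseen classes without labeled examples; for Depth Pro, it means estimating depth for kinds of images the model was not specifically trained on.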

This flexibility makes the AI model adaptable to various applications.

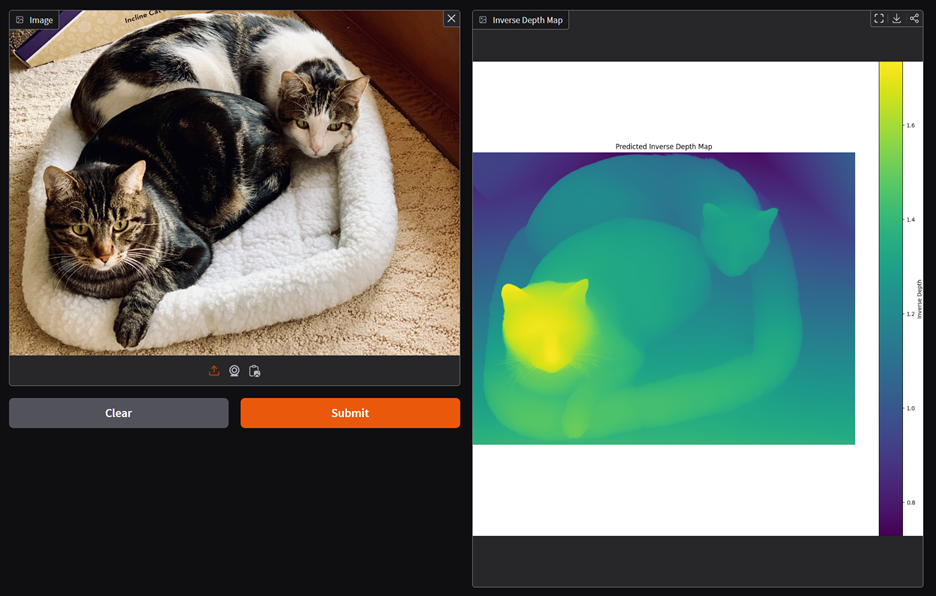

Depth Pro is a fast metric depth prediction model. Upload an image to predict its inverse depth map and focal length. Large images will be automatically resized to 1536×1536 pixels.
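The demo shows an inverse depth map, where nearer surfaces get larger values. A rough sketch of how such a map relates to ordinary depth, and how it might be scaled for display, is below. It assumes the inverse depth is expressed in 1/meters; the function names and the clamping threshold are hypothetical and not taken from the Depth Pro code.

```python
def inverse_to_depth(inv_depth, min_inv=1e-4):
    """Convert inverse depth (assumed 1/meters) to depth in meters.

    Values below min_inv are clamped to avoid dividing by zero for
    very distant points such as sky.
    """
    return [[1.0 / max(v, min_inv) for v in row] for row in inv_depth]

def normalize_for_display(inv_depth):
    """Scale inverse depth to the 0..1 range so nearer pixels are brighter."""
    flat = [v for row in inv_depth for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # guard against a perfectly flat map
    return [[(v - lo) / span for v in row] for row in inv_depth]

# Hypothetical 2 x 2 inverse depth map (1/meters).
sample = [[0.5, 2.0], [1.25, 0.5]]
print(inverse_to_depth(sample))
print(normalize_for_display(sample))
```

Displaying inverse depth rather than depth spreads the color range over nearby objects, which is likely why a close-up subject stands out as a bright hot spot.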

Hugging Face has an online demo of the program figuring out depth.

Since all AI is based on cats, I tried the software using my cats, and generated a promising hot-spot depth map.

The code is here.

It’s an interesting approach, but one of our colleagues thinks they’d do better to include a laser-based 3D camera module. Too many start-ups have been making them, and many are going out of business. They could buy, and probably are buying, IP very cheaply now.