Since the beginning, computer technology has been the lifeblood of gaming, from single-player to multi-player, and from arcade games to console titles, PC games, and games on mobile phones. The look of games has changed over the years, as have the platforms, and today the GPU is found at the heart of every gaming device.

Gaming has been a significant part of computer graphics since the beginning. It uses all the elements of CG, from CAD and 3D modeling to simulation and rendering. Gaming draws on so many aspects of computers and programming that the list is almost endless.

Gaming is not limited to a single player on a single device; it extends to many players in different locations on various devices. The first multi-player games came into existence in the late 1970s and were known as MUDs, short for multi-user dungeons. They were served on internal networks, also known as LANs (local-area networks). Online gaming involving the Internet arrived in 1991, when America Online (AOL) introduced Neverwinter Nights, the first graphical multi-player online role-playing game.

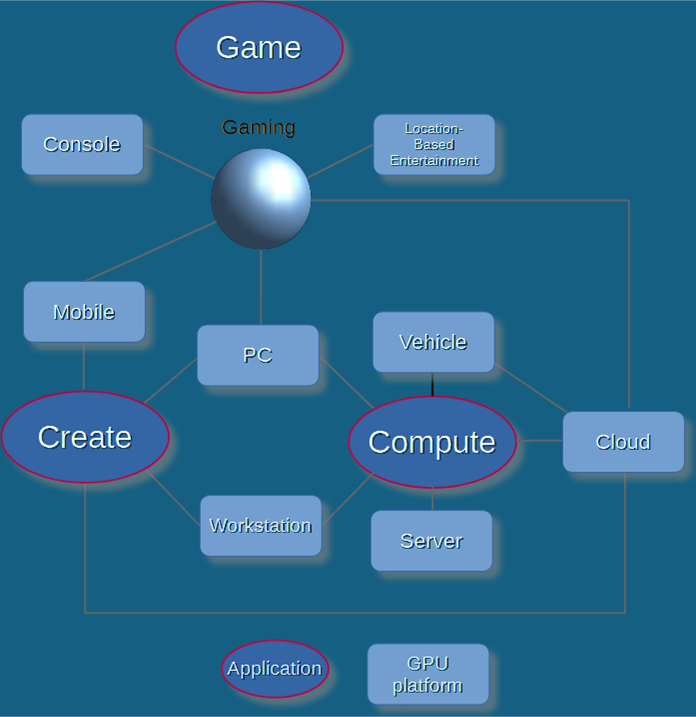

The first computer game is generally considered to be Spacewar!, which was developed in 1962 at MIT. The first arcade video game (a category now known as location-based entertainment, or LBE) was Computer Space, released in 1971 by Nolan Bushnell and Ted Dabney, the founders of Atari. The Magnavox Odyssey, released in 1972, was the first commercial home video game console. The first game on a PC was Microsoft Adventure, released in 1981 for the IBM PC. And the first game on a mobile phone was a pre-installed version of Tetris on the Hagenuk MT-2000 in 1994.

All of those platforms have migrated to the GPU as the gaming processor of choice.

Games are also created on GPUs located on local workstations, campus servers, and cloud-based data centers. And AI can be found in almost all of them.

GPUs are not only ubiquitous in gaming; they have become synonymous with it. The GPU was developed to provide the parallel processing capability that gaming required, and its development was aided and abetted by Moore’s law and very large-scale integration. That enormous processing power did not go unnoticed by data scientists, and soon they were employing GPUs as compute accelerators. That, in turn, lit up the imagination of AI scientists and led to the revolution and explosion in AI. You could say GPUs made AI, or at the very least that they enabled or empowered it.