In 1983, NCR formed a team of six to design a graphics controller that could be used in NCR products and be sold to other companies. The team designed a two-chip approach: a graphics controller and a memory interface controller tied together by a proprietary pixel bus. The team incorporated a frame buffer and text by using a dual-text mode technique, allowing the generation of ASCII text via a cache-memory drawing technique using variable memory-word width with a two-stage color lookup table. The team chose the Graphics Kernel System (GKS) and embedded 25 GKS graphics, timing, and control commands in the 7300 graphics controller. NCR’s midrange chip was overspec’d, as it could drive a 1024×1024 display with an 8-bit frame buffer, and it could run at an impressive 3.75 MIPS and drive displays at 30MHz. However, NCR was the only major customer for the chip, and that wasn’t enough to sustain development. The 7300 became the 77C32BLT and was used on Puretek graphics AIBs through 1987.

NCR, a multibillion-dollar computer systems and semiconductor manufacturer, established its microelectronics laboratory in 1963 in Colorado Springs, Colorado, to stay abreast of the emerging semiconductor industry. By 1968, NCR had produced its first MOS circuits, and by 1970, a complete family of circuits had been designed and incorporated into NCR products.

By the mid-1980s, the PC market had largely settled into its structure: low-end, entry-level systems were used by clerks and for simple data entry, consumers bought midrange systems, and gamers and power users bought high-end systems. Above that sat a niche segment, the workstation. There were plenty of opportunities, room for differentiation, and challenges. APIs were in flux, the bus wars raged, and displays expanded in resolution. There were only eight graphics controller suppliers, but the population was swelling rapidly and would hit 47 in 10 years.

What a perfect time to enter the market, grow with it, and help shape it. And with a recognizable and respected brand like NCR, the company’s entry seemed like a slam dunk.

In 1983, NCR pulled together a tiger team of six and charged them with designing and producing a graphics controller the company could use and others would also buy. The team’s biggest challenge was picking the segment and specifications. PCs were just getting bit-mapped graphics and a more extensive color range, text was still essential, and somehow all that had to be handled simultaneously and efficiently.

The development costs wouldn’t be cheap, and chip manufacturing was expensive, so the team decided to shoot for the midrange to get the best ROI.

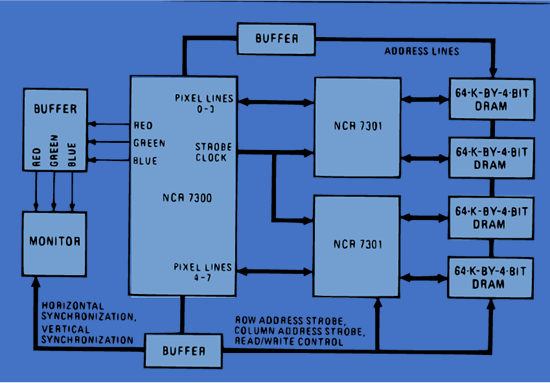

Very large-scale integration (VLSI) was still in its early stages, and there was only so much that was practical to put in one chip. Therefore, the team decided on a two-chip approach: a graphics controller (the 7300) and a memory interface controller (the 7301), tied together by a proprietary pixel bus with clock, as depicted in Figure 1.

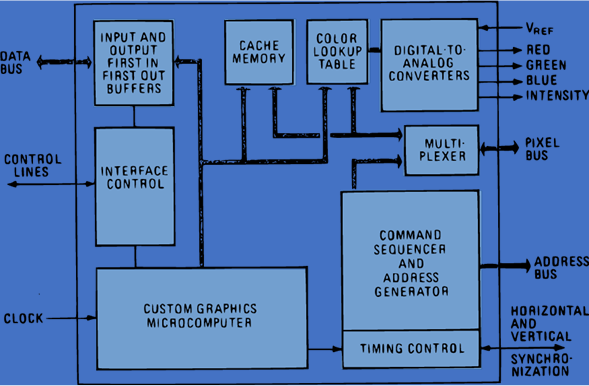

The team was able to combine graphics features incorporating a frame buffer and text by using a clever dual-text mode technique that allowed the generation of ASCII text via a cache-memory drawing technique using variable memory-word width with a two-stage color lookup table.

Since there were few standards, the team chose the GKS (Graphics Kernel System), the American National Standards Institute’s (ANSI) standard introduced in 1977. The team was able to embed 25 GKS graphics, timing, and control commands in the 7300, more than the proprietary Hitachi HD63484 or NEC 7220 offered. That gave the 7300 built-in compatibility with any PC software application that used GKS. NCR also developed a driver that could run under Unix or DOS.

In those days, text operations were still more predominant, and many graphics chips of the time incorporated a character generator to speed up text generation. NCR put the character generator in an unused section of the frame buffer, a technique used in CAD applications for display lists. Placing the character library in the frame buffer as bit maps gave the user a scaling capability for the character size. Those bit-mapped characters could then be accessed (using 8-bit codes), eliminating the bottleneck of passing images between the host and the 7300.
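The idea of keeping the character library in the frame buffer can be sketched in a few lines of Python. This is a minimal model under stated assumptions, not NCR's implementation: the buffer dimensions, the 8×8 glyph cell, the font-area layout, and every function name (`make_framebuffer`, `glyph_origin`, `store_glyph`, `draw_char`) are hypothetical and chosen only for illustration. It shows an 8-bit code selecting a stored bitmap and pixel replication providing the scaling.

```python
# Illustrative sizes only; these are assumptions, not the 7300's real layout.
VISIBLE_W, VISIBLE_H = 128, 64   # on-screen region of the frame buffer
GLYPH_W, GLYPH_H = 8, 8          # assumed glyph cell size
GLYPHS_PER_ROW = VISIBLE_W // GLYPH_W            # 16 glyphs across
FONT_H = (256 // GLYPHS_PER_ROW) * GLYPH_H       # rows needed for 256 codes


def make_framebuffer():
    """8-bit frame buffer: visible rows first, off-screen font rows below."""
    return [[0] * VISIBLE_W for _ in range(VISIBLE_H + FONT_H)]


def glyph_origin(code):
    """Map an 8-bit character code to its bitmap's top-left corner."""
    code &= 0xFF
    x = (code % GLYPHS_PER_ROW) * GLYPH_W
    y = VISIBLE_H + (code // GLYPHS_PER_ROW) * GLYPH_H
    return x, y


def store_glyph(fb, code, rows, color=1):
    """Write a glyph into the font area; each int in rows is one 8-pixel row."""
    gx, gy = glyph_origin(code)
    for y, bits in enumerate(rows):
        for x in range(GLYPH_W):
            lit = (bits >> (GLYPH_W - 1 - x)) & 1
            fb[gy + y][gx + x] = color if lit else 0


def draw_char(fb, code, dst_x, dst_y, scale=1):
    """Copy a stored glyph into the visible area, replicating pixels by scale."""
    gx, gy = glyph_origin(code)
    for y in range(GLYPH_H * scale):
        for x in range(GLYPH_W * scale):
            fb[dst_y + y][dst_x + x] = fb[gy + y // scale][gx + x // scale]
```

In this model the host only has to send the one-byte code and a destination; the bitmap itself never crosses the host interface, which is the bottleneck the paragraph above describes.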

The 7300 had independent first-in, first-out (FIFO) input and output registers. Both were 16 bits wide and 16 words deep. Its internal architecture was 8-bit, but the interface logic could work with 8- or 16-bit processors. Its FIFO registers were used to transfer pixel data and commands. The controller used the command register to specify the bus’s data width (8 or 16 bits) and handle the initialization handshake signals for DMA transfers. The status register held the FIFO register status and indicated when the output FIFO register was empty. The generalized block diagram of the 7300 controller is shown in Figure 2.
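A FIFO of that shape is easy to model. The sketch below takes only the width (16 bits) and depth (16 words) from the description above; the class name `Fifo`, its methods, and the way the empty and full conditions are exposed are assumptions for illustration and do not reflect the 7300's actual register layout.

```python
from collections import deque

FIFO_DEPTH = 16      # words, per the description above
WORD_MASK = 0xFFFF   # 16-bit words, per the description above


class Fifo:
    """Hypothetical software model of one 16-word by 16-bit FIFO."""

    def __init__(self):
        self._words = deque()

    @property
    def empty(self):
        # The kind of condition a status register would report.
        return len(self._words) == 0

    @property
    def full(self):
        return len(self._words) == FIFO_DEPTH

    def push(self, word):
        """Queue one word; return False (and drop nothing) when full."""
        if self.full:
            return False
        self._words.append(word & WORD_MASK)
        return True

    def pop(self):
        """Return the oldest word, or None when the FIFO is empty."""
        return self._words.popleft() if self._words else None
```

A host driver built on such a model would check `full` before writing commands or pixel data and check `empty` on the output side before reading, which is the role the status register plays in the text.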

The 7300 featured an on-chip programmable color lookup table with overlay mode. That was usually a feature found in separate LUTDAC (lookup table, digital-to-analog converter) chips. The 7300 preceded the wildly popular Acumos and Cirrus Logic GD5400 series that introduced such a feature in 1991. Not only that, the 8-bit frame buffer, also a first, could drive a 1024×1024 display, if one had such a thing in those days. Workstation chipsets had such features, so NCR’s midrange chip was overspec’d in that sense.
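To put those numbers in perspective, the arithmetic behind an 8-bit, 1024×1024 frame buffer and its lookup table can be worked through as follows. The helper names and the grayscale table contents are assumptions made for this example; the 7300's real table format and its overlay mode are not modeled here.

```python
def framebuffer_bytes(width, height, bits_per_pixel):
    """Memory needed to hold one full frame."""
    return width * height * bits_per_pixel // 8


def palette_entries(bits_per_pixel):
    """Number of distinct pixel values, and so lookup-table entries."""
    return 1 << bits_per_pixel


# Hypothetical table: a simple grayscale ramp, one (R, G, B) entry per index.
gray_lut = [(i, i, i) for i in range(palette_entries(8))]


def resolve(pixel_index, lut):
    """Translate an 8-bit frame buffer value into the color it selects."""
    return lut[pixel_index & 0xFF]


size = framebuffer_bytes(1024, 1024, 8)   # 1,048,576 bytes, i.e. 1 MiB
colors = palette_entries(8)               # 256 simultaneous colors
```

A full megabyte of display memory was a substantial amount for a midrange PC board of the period, which helps explain why the part looked overspecified for its target segment.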

The 7300/7301 chipset was built in a 2000nm dual polysilicon NMOS process—state of the art for the day. And even though the team employed conservative design rules, the controller could run at an impressive 3.75 MIPS and drive displays at 30MHz.

At the time, the company said it planned to integrate the 7300 and 7301 into a single chip, which would have been the logical thing to do, and in 1987 it filed a patent. But NCR was the only major customer for the chip, and that wasn’t sufficient volume to sustain the development. The number of competitors entering the field was increasing rapidly, and prices were dropping just as fast, as too many companies chased too few customers. The 7300 became the 77C32BLT and was used on Puretek graphics AIBs through 1987. It was also used on MacroSystem’s Altais AIB for the DraCo Computer (Amiga 060 Clone). Single-chip designs from the competition outpaced and outpriced NCR, and it quietly withdrew from the market.

Then and now

NCR became a significant computer supplier, providing everything from PCs to workstations, minicomputers, and mainframes. By 1986, the number of US mainframe suppliers had shrunk from eight (IBM and the “Seven Dwarfs”) to six (IBM and the “BUNCH”), and then to four: IBM, Unisys, NCR, and Control Data Corporation.

On September 19, 1991, AT&T acquired NCR for $7.4 billion, and by 1993, the subsidiary had a $1.3 billion loss on $7.3 billion in revenue. The losses continued, and AT&T was NCR’s biggest customer, accounting for over $1.5 billion in revenue.

AT&T sold the NCR microelectronics division in February 1995 to Hyundai. At that time, it was the largest purchase of a US company by a Korean company. Hyundai renamed it Symbios Logic.

In 1996, following a spin-off of the NCR group, NCR re-emerged as a stand-alone company. Today, NCR is a large enterprise technology provider for restaurants, retailers, and banks. It is the leader in global POS software for retail and hospitality, and provides multivendor ATM software.