Share your GPU, use your colleagues

We truly have entered the era of ubiquitous computing. It started in the 1960s with time-share computers, expanded in the late ’70s with the commercialization of the ARPANET into the Internet, and developed further in the mid-2000s as the concept of the cloud became universal. The final step was virtualization, and specifically virtualization of the GPU.

Virtualization of the GPU has had many fathers. The first commercially available example of it was 3Dlabs’ virtualization of code space in the GPU in 2004 in the Wildcat VP. Also in 2004, Imagination Technologies enabled a single core to virtualize multiple processors and to manage and process the real-time and concurrent tasks that result. The idea caught Justin Rattner’s attention, and at the 2005 Intel R&D Vision conference, he challenged the audience with the question, “Can we fully virtualize the platform? What does it take to virtualize the subsystem, the storage, display, processor, etc.?” Then he showed two (virtualized) systems using one IGC with the display and a window in it, and said, “Each application thinks it owns the display.”

The seeds were sown

In 2006, AMD, Intel, and OEMs including Dell, HP, and IBM joined the Distributed Management Task Force to develop a standard management API. The move also signaled the return of the thin client. Powerful multi-core server processors enable managed clients, which make sense in a wide range of enterprises, and especially in education.

Lucid Logix demonstrated a virtualized GPU at CES 2011 in a private suite, and most of the industry seemed to miss that revelation.

Then at Nvidia’s GTC conference in May 2012, Jen-Hsun Huang visibly relished the opportunity to introduce VGX, codenamed Monterey, and show how it enabled the GPU to be virtualized.

Dirty little secret

Nvidia’s demonstration of its VGX technology at GTC tore the covers off the dirty little secret the computer industry would have liked to keep under wraps as long as possible: VDI technologies are going to enable ubiquitous computing wherever we are. SGI predicted the future close to 20 years ago when it demonstrated Grid Computing and the ability to virtualize graphics so that any device can access and interact with graphics-heavy data in the network. The bandwidth just wasn’t there at the time.

Today the ability to virtualize graphics opens up the opportunity to share the graphics resources among users dynamically. For instance, if three users are slaving away on spreadsheets and one user is working on Adobe’s After Effects, the majority of the graphics resources can go to the After Effects user.
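To make the idea concrete, here is a minimal sketch of proportional sharing, written in Python purely for illustration. The function name, the user labels, and the demand weights are all hypothetical. This is not how VGX or any vendor's scheduler actually works; it only shows the arithmetic of handing the heaviest user the largest slice.

```python
def allocate_gpu_share(demands):
    """Split one GPU's capacity among users in proportion to demand.

    `demands` maps a user label to a non-negative weight. The weights
    are made-up numbers for illustration, not measured workloads.
    """
    total = sum(demands.values())
    if total <= 0:
        # Nobody is asking for graphics right now, so split evenly.
        return {user: 1.0 / len(demands) for user in demands}
    return {user: weight / total for user, weight in demands.items()}


# Three spreadsheet users and one After Effects user (hypothetical weights).
demands = {
    "spreadsheet_1": 1,
    "spreadsheet_2": 1,
    "spreadsheet_3": 1,
    "after_effects": 7,
}
shares = allocate_gpu_share(demands)

for user, share in shares.items():
    print(f"{user}: {share:.0%} of the GPU")
```

With these assumed weights the After Effects user ends up with the majority of the GPU, and the split would shift again as soon as the weights change.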

The users want it

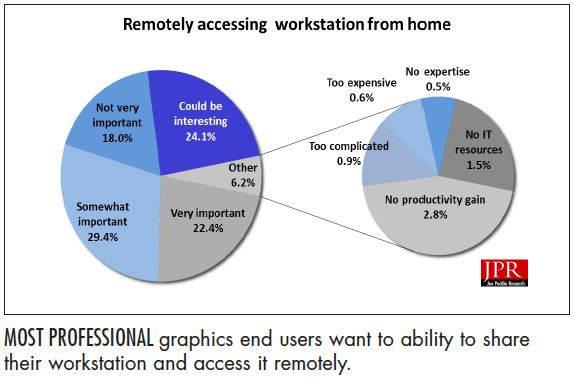

We recently conducted a survey of end users of professional graphics and asked them if they thought having remote access to their workstation, or allowing a colleague to access it, was interesting, important, or wouldn’t offer any productivity gain. Over 75% of the 2,370 respondents said they thought it was either very important or interesting. Six percent responded with “Other,” and the majority of those said they didn’t have the expertise or IT support to do it. An average of 19% said it wouldn’t be important.

We also asked the respondents if they knew the GPU could be shared, and got a surprising result—45% of them did know it.

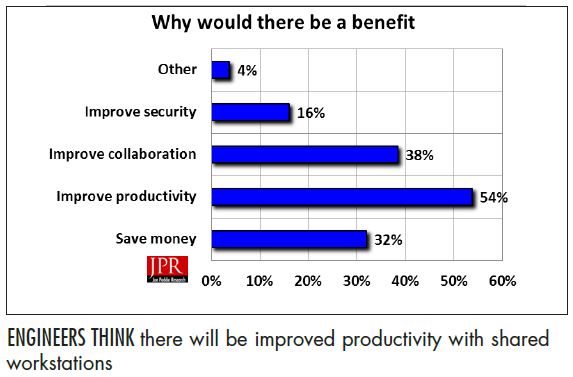

And when we asked where there would be a benefit, productivity and collaboration were the most significant areas.

A journalist asked me the other day, “Why would companies like AMD and Nvidia promote and support this idea of virtualizing the GPU? Wouldn’t it reduce their sales?”

No, it won’t. It will actually expand sales, and sales of high-end GPUs in particular. Why? Because all those companies that couldn’t (or wouldn’t) spend the money, $1,500 to $10,000 each, to equip all of their engineers with workstations can now give them workstation performance at a fraction of the cost. As for the others who already buy workstations, they’re not going anywhere. They buy those machines for a reason, and their demands are only going up.

We’ll be discussing this at our Siggraph press luncheon. And in late October we’re holding a conference for end users on remote graphics and virtualization. This is a hot topic and you need to get up to speed on it.