At Siggraph 2024, Nvidia made several major announcements, most of them involving NIM (Nvidia Inference Microservice), a set of accelerated inference microservices that enable organizations to easily build AI apps and run AI models on Nvidia GPUs just about anywhere. Among them, Nvidia announced generative AI models and NIM microservices for OpenUSD language, geometry, physics, and materials. The company also announced NIM-related news pertaining to Hugging Face Inference-as-a-Service running on Nvidia DGX Cloud, as well as the role of NIMs in Shutterstock's new Generative 3D service. In addition, Nvidia announced a new AI Foundry service and NIM microservices for building custom Llama 3.1 generative AI models for enterprises. There are also new NIMs for digital humans, digital biology, and robotics.

NIMs are not new, but they are proliferating and gaining in popularity, thanks to the meteoric rise of generative AI.

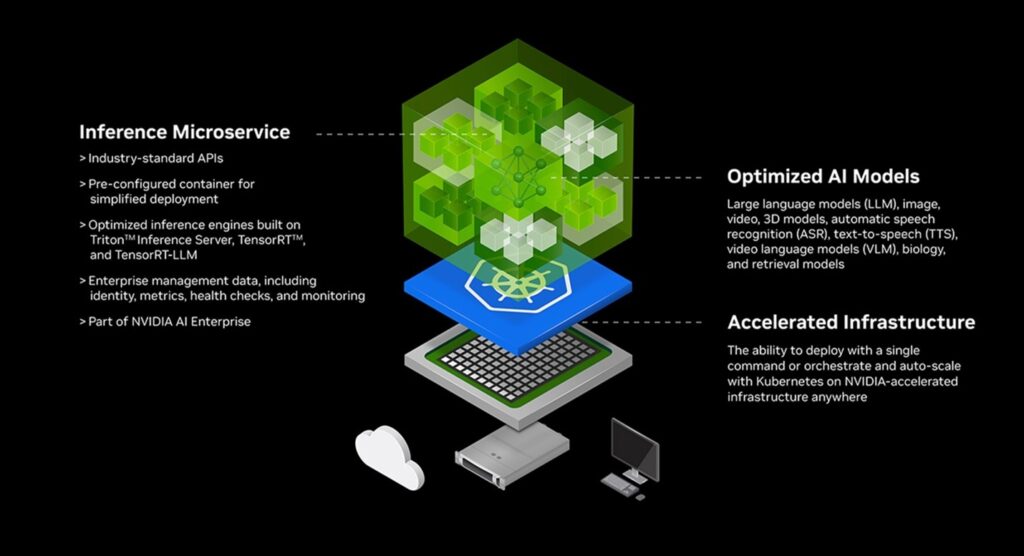

NIM, short for Nvidia Inference Microservices, is a set of accelerated microservices and the way Nvidia optimizes and packages AI models, making it easier and faster for developers to build AI-powered enterprise applications and to deploy AI models on Nvidia GPUs in the cloud, in data centers, and on GPU-accelerated workstations. Part of Nvidia AI Enterprise, NIM is a comprehensive software solution built for applications at scale. Each NIM container packages optimized AI models, optimized inference engines, domain-specific code and libraries, and industry-standard APIs that run out of the box across Nvidia GPUs.
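
The "industry-standard APIs" point is easiest to picture with an example. Nvidia documents its LLM NIMs as exposing an OpenAI-compatible HTTP API, so a client talks to one the same way it would talk to other chat-completion services. The sketch below assumes a Llama 3.1 NIM running locally on port 8000; the base URL, port, and model identifier are illustrative assumptions, not details from the Siggraph announcements. It uses only the Python standard library and builds the request without sending it.

```python
import json
import urllib.request

# Assumptions for illustration: a Llama 3.1 NIM served locally on port 8000,
# exposing an OpenAI-compatible /v1/chat/completions endpoint.
NIM_BASE_URL = "http://localhost:8000/v1"
MODEL_ID = "meta/llama-3.1-8b-instruct"  # hypothetical choice of model ID


def build_chat_request(prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{NIM_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first completion choice."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Write OpenUSD Python that adds a sphere to a stage.")
    print(req.full_url)
    # print(send(req))  # only works with a NIM endpoint actually running
```

Because the request shape follows a widely used convention rather than a NIM-specific one, swapping the model or pointing the base URL at a hosted endpoint would, under these assumptions, require no other client changes.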

Nvidia began building NIMs in Nvidia AI Foundry, its end-to-end offering for creating customized generative AI models. Two months ago, Nvidia made its NIM inference microservices available for developers to download, with more than 40 Nvidia and community models to choose from. Today, there are over 100 preview NIMs across many domains and modalities, and more are being added constantly. Every Monday, Nvidia launches a new AI model as part of what it calls Model Mondays.

Some of the more significant recent releases include a NIM that powers Hugging Face Inference-as-a-Service running on Nvidia DGX Cloud. During Siggraph, Shutterstock released its Generative 3D service in commercial beta; it lets users quickly prototype 3D assets and generate 360-degree HDRI backgrounds that light scenes, all from text or image prompts alone. The service is built on Edify, Nvidia's visual AI foundry architecture, and packaged with NIM microservices. Nvidia also just launched a new AI Foundry service and NIM microservices for the Llama 3.1 collection of models. The Llama NIMs join other newly released NIM microservices for language understanding, digital humans, digital biology, and robotics.

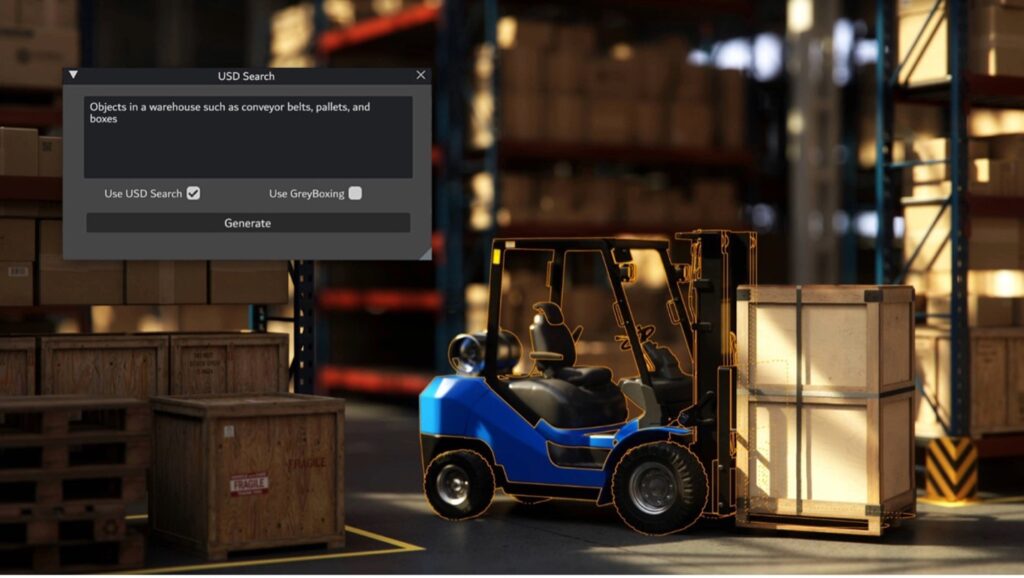

Nvidia's new OpenUSD NIM microservices can understand OpenUSD-based language, geometry, materials, physics, and spaces, said Rev Lebaredian, vice president of Omniverse and simulation technology at Nvidia. The three NIMs now available in preview on the Nvidia API catalog are:

- USD Code: For answering OpenUSD knowledge questions and generating OpenUSD Python code.

- USD Search: For searching through massive libraries of OpenUSD 3D and image data using natural language or image inputs.

- USD Validate: For checking the compatibility of uploaded files against OpenUSD release versions and generating a fully RTX-rendered, path-traced image using Omniverse Cloud APIs.

Additional NIMs for OpenUSD will be available soon, including:

- USD Layout: Assembles OpenUSD-based scenes from a series of text prompts.

- USD SmartMaterial: Predicts and applies realistic materials to a 3D object.

- FVDB Mesh Generation: Generates an OpenUSD-based mesh, rendered by Omniverse APIs, from point cloud data.

- FVDB Physics Super-Res: Performs AI super-resolution on a frame or sequence of frames to generate an OpenUSD-based high-resolution physics simulation.

- FVDB NeRF-XL: Generates large-scale neural radiance fields in OpenUSD using Omniverse APIs.

The company also announced two new portals that extend OpenUSD to robotics and industrial simulation data formats. Users will also be able to stream large, fully RTX ray-traced datasets to the Apple Vision Pro. These tools and APIs are available in early access.

Lebaredian noted that until recently, digital worlds were used primarily by creative industries. But with the enhancements and accessibility that NIM microservices bring to OpenUSD, all kinds of industries can now build physically based virtual worlds and digital twins as they prepare for the next wave of AI: robotics.

“The generative AI boom for heavy industries is here,” he added.

According to Lebaredian, Nvidia has built the first phase of an OpenUSD connector to the Unified Robot Description Format (URDF), the most widely used robotics model format for design, simulation, and reinforcement learning. The connector is now available with Isaac Sim. Nvidia has also enabled computational fluid dynamics (CFD) simulations to be rendered in OpenUSD format.

Within the manufacturing sector, Foxconn is using NIM microservices and Omniverse to create a digital twin of a factory that’s under development.

In a very different industry, marketing agency WPP has adopted the USD Search and USD Code NIM microservices to build an AI-enabled content creation pipeline on Omniverse.

According to Lebaredian, generative AI for content creation is amazing, but it's hard to control: artists can't edit fine details exactly the way they'd like. With Omniverse and NIM microservices, they can bring control and collaboration to visual generative AI workflows. Artists assemble their scene in Omniverse the way they want and render the view they want. The 2D render is then fed, along with a text prompt, into Edify 3D or any other image generator, which produces an upscaled, refined version of the basic stage. To move an object, they go back into Omniverse, re-render the view, and run the AI generation again on the new render.

Nvidia is offering the OpenUSD Exchange SDK, which will enable developers to build their own OpenUSD data connectors, further growing the OpenUSD ecosystem.