Two recent developments in GPU technology have emerged: Alex Fish’s Pescado GPU, built on the Espressif ESP32-S3 microcontroller, and Dylan Barrie’s FuryGPU, utilizing the Xilinx Zynq UltraScale+. Barrie admits his project is a hobby, not a competitor in the GPU market, yet it opens possibilities for innovation. Over nearly four years, Barrie developed his GPU, learning SystemVerilog and FPGA intricacies. Fish’s Pescado GPU incorporates a small display and a 3D graphics and physics engine coded from scratch in C++ and OpenGL. Fish’s project includes joysticks for control and is released on GitHub under an unspecified license.

We’ve tracked DIY graphics controllers and GPUs for quite some time. Like almost every other DIY project, each one is a labor of love, curiosity, and blind determination. In a bit of shameless self-promotion, I direct you to my third book on GPUs, The History of the GPU - New Developments. Chapter 6, Open GPU Projects, chronicles DIY and university projects beginning in 2000. The point is that this is not a new phenomenon, and some really fun developments have come out of it.

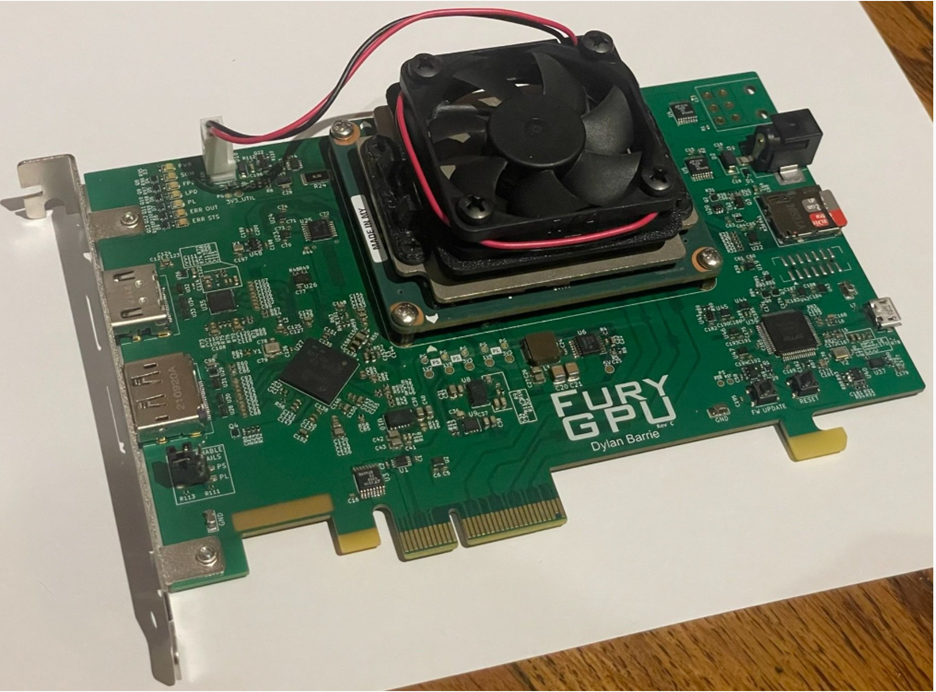

Two recent developments that got into the public information stream are Alex Fish’s Pescado GPU, whose Pescado 3D Engine is built around an Espressif ESP32-S3 microcontroller, and Dylan Barrie’s FuryGPU, which uses the Xilinx Zynq UltraScale+.

When questioned about his use of the Xilinx field-programmable gate array (FPGA), Barrie said (on Hacker News), “Let’s be clear here, this is a toy. Beyond being a fun project to work on that could maybe get my foot in the door were I ever to decide to change careers and move into hardware design, this is not going to change the GPU landscape or compete with any of the commercial players. What it might do is pave the way for others to do interesting things in this space.”

On his Web page (cited in the caption above), Barrie states he spent almost four years developing the GPU and stack in his spare time (he has a day job). Over that time, the project turned from a neat little tech demo idea into a full-fledged, real-world, plug-it-into-your-computer GPU. He taught himself SystemVerilog, figured out how FPGAs work, and spent countless hours refactoring, redesigning, and streamlining the design until he could get Quake to render at semi-real-time frame rates. A demo of that can be seen here, and a collection of other videos of it is here.

Of all the parts of this project, writing Windows drivers for it was the most painful, said Barrie.

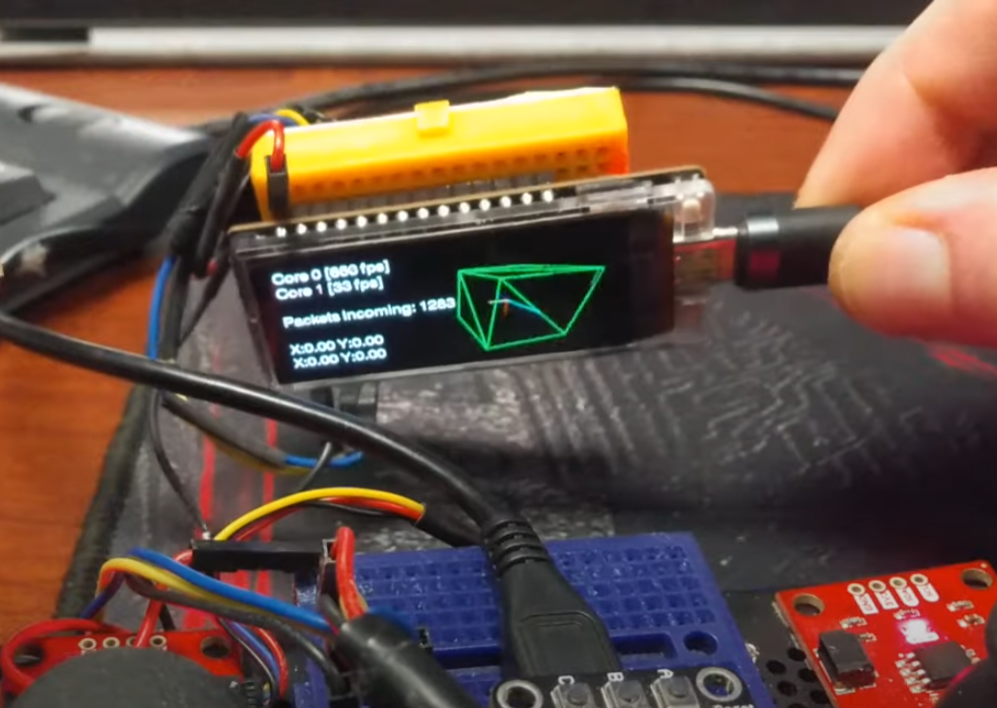

Alex Fish took a slightly different approach with his Pescado GPU and included a small display, which can be seen here.

Fish’s GPU engine is a 3D graphics and physics engine he built from scratch in C++ and OpenGL. All the libraries, including the vector and matrix math libraries, were written from scratch. The physics and geometry processing happens in 3D, but when you want to display the image on your 2D monitor, you can only plot 2D points. Thus, every 3D point is projected and squashed to the screen using a perspective projection matrix and perspective division. Although it looks 3D, it is actually 2D. There is no such thing as 3D graphics.
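To make the projection step concrete, here is a minimal C++ sketch of a perspective projection followed by perspective division. It is not taken from Fish's code: the types `Vec3`, `Vec4`, `Mat4`, and the functions `perspective` and `projectToScreen` are hypothetical names chosen for illustration, and the matrix layout assumes the common OpenGL convention (right-handed, camera looking down the negative Z axis).

```cpp
#include <array>
#include <cmath>

// Hypothetical minimal types for illustration; not Pescado's actual API.
struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };
struct Vec4 { float x, y, z, w; };
using Mat4 = std::array<std::array<float, 4>, 4>;  // row-major: m[row][col]

// Multiply a 4x4 matrix by a column vector.
Vec4 mul(const Mat4& m, const Vec4& v) {
    return {
        m[0][0] * v.x + m[0][1] * v.y + m[0][2] * v.z + m[0][3] * v.w,
        m[1][0] * v.x + m[1][1] * v.y + m[1][2] * v.z + m[1][3] * v.w,
        m[2][0] * v.x + m[2][1] * v.y + m[2][2] * v.z + m[2][3] * v.w,
        m[3][0] * v.x + m[3][1] * v.y + m[3][2] * v.z + m[3][3] * v.w,
    };
}

// OpenGL-style perspective matrix (assumed convention: camera looks down -Z).
Mat4 perspective(float fovYRadians, float aspect, float zNear, float zFar) {
    const float f = 1.0f / std::tan(fovYRadians / 2.0f);
    Mat4 m{};  // all elements start at zero
    m[0][0] = f / aspect;
    m[1][1] = f;
    m[2][2] = (zFar + zNear) / (zNear - zFar);
    m[2][3] = (2.0f * zFar * zNear) / (zNear - zFar);
    m[3][2] = -1.0f;  // copies -z into w, which drives the perspective division
    return m;
}

// Project a 3D point to 2D pixel coordinates.
// Assumes the point is in front of the camera, so clip.w is not zero.
Vec2 projectToScreen(const Vec3& p, const Mat4& proj, float width, float height) {
    const Vec4 clip = mul(proj, {p.x, p.y, p.z, 1.0f});
    // Perspective division: distant points shrink toward the screen center.
    const float ndcX = clip.x / clip.w;
    const float ndcY = clip.y / clip.w;
    // Map normalized device coordinates [-1, 1] to pixels; flip Y so +Y is up.
    return {(ndcX + 1.0f) * 0.5f * width, (1.0f - ndcY) * 0.5f * height};
}
```

In this sketch, a point on the camera's line of sight lands at the center of the screen, and moving the same point farther away pulls its projection toward that center. That division by depth is the whole trick behind the 3D look.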

Points and lines are grouped into triangles. Triangles are grouped into meshes (shapes). These shapes then get placed (transformed) into the world by a model-to-world matrix (TRS). This TRS matrix scales, rotates, and translates every visible point (in that exact order) from local space to world space.
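The scale, rotate, translate order can be sketched in C++ as follows. This is an illustration under stated assumptions, not Fish's implementation: the helper names (`scaling`, `rotationY`, `translation`, `modelToWorld`, `transformPoint`) are hypothetical, rotation is limited to the Y axis for brevity, and points are treated as column vectors, so the matrix is composed as T * R * S to make scaling apply first.

```cpp
#include <array>
#include <cmath>

// Hypothetical minimal types for illustration; not Pescado's actual API.
struct Vec3 { float x, y, z; };
using Mat4 = std::array<std::array<float, 4>, 4>;  // row-major: m[row][col]

Mat4 identity() {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

// Standard 4x4 matrix product a * b.
Mat4 multiply(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

Mat4 scaling(Vec3 s) {
    Mat4 m = identity();
    m[0][0] = s.x; m[1][1] = s.y; m[2][2] = s.z;
    return m;
}

// Rotation about the Y axis only (a simplifying assumption for this sketch).
Mat4 rotationY(float radians) {
    Mat4 m = identity();
    const float c = std::cos(radians), s = std::sin(radians);
    m[0][0] = c;  m[0][2] = s;
    m[2][0] = -s; m[2][2] = c;
    return m;
}

Mat4 translation(Vec3 t) {
    Mat4 m = identity();
    m[0][3] = t.x; m[1][3] = t.y; m[2][3] = t.z;
    return m;
}

// With column vectors the rightmost matrix applies first:
// T * R * S means scale, then rotate, then translate.
Mat4 modelToWorld(Vec3 t, float yawRadians, Vec3 s) {
    return multiply(translation(t), multiply(rotationY(yawRadians), scaling(s)));
}

// Transform a point (implicit w = 1) from local space to world space.
Vec3 transformPoint(const Mat4& m, Vec3 p) {
    return {
        m[0][0] * p.x + m[0][1] * p.y + m[0][2] * p.z + m[0][3],
        m[1][0] * p.x + m[1][1] * p.y + m[1][2] * p.z + m[1][3],
        m[2][0] * p.x + m[2][1] * p.y + m[2][2] * p.z + m[2][3],
    };
}
```

The order matters because matrix multiplication does not commute. Translating before rotating, for example, would swing the mesh around the world origin instead of spinning it in place.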

You can get full details on the project here. The board/display is a LILYGO T-Display-S3 ESP32-S3, available here.

Fish also incorporated two SparkFun Qwiic-connected joysticks via a Texas Instruments TCA9548A I2C switch—one of which is worn around the index finger, providing a control system easily accessible by thumb—and a TDK InvenSense MPU-6050 inertial measurement unit. Using them, Fish can control the objects on-screen manually or have them respond to the movement of the device itself.
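For readers unfamiliar with I2C switches, the sketch below shows how a host typically picks one downstream channel on a TCA9548A: it writes a single control byte in which bit n enables channel n. Everything else here is an assumption for illustration. The `I2cWriteByte` callback is a hypothetical stand-in for whatever I2C driver a project uses, the address 0x70 assumes the chip's A0 to A2 pins are tied low, and nothing in the source says which channels Fish's joysticks are wired to.

```cpp
#include <cstdint>
#include <functional>
#include <stdexcept>

// Assumed 7-bit address with address pins A0..A2 tied low.
constexpr std::uint8_t kTca9548aAddress = 0x70;

// Hypothetical bus abstraction: write one byte to a 7-bit I2C address.
using I2cWriteByte =
    std::function<void(std::uint8_t address, std::uint8_t value)>;

// Build the control byte: bit n set means downstream channel n is enabled.
std::uint8_t channelMask(unsigned channel) {
    if (channel > 7) {
        throw std::out_of_range("TCA9548A has channels 0 through 7");
    }
    return static_cast<std::uint8_t>(1u << channel);
}

// Route the bus to exactly one downstream channel.
void selectChannel(const I2cWriteByte& write, unsigned channel) {
    write(kTca9548aAddress, channelMask(channel));
}
```

After a call such as `selectChannel(write, 1)`, ordinary reads and writes reach only the device on that channel, which is how two devices that answer to the same I2C address can share one bus.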

Fish has released the engine on GitHub under an unspecified license; the OpenGL version of Pescado is also available on GitHub under the permissive Unlicense.

In other GPU news, Intel has released an open-source Linux GPU-compute stack update.

Intel’s latest update to its open-source Compute Runtime and Intel Graphics Compiler (IGC), facilitating OpenCL and Level Zero support on Linux and Windows systems, is available. The release, labeled Intel Compute Runtime 24.09.28717.12, marks the first update since mid-February, bringing significant new code. With over 200 patches, enhancements include performance tuning, bindless mode for Level Zero on DG2 Alchemist, improvements to the Intel Xe DRM driver, new introspection APIs, L3 fabric error reporting, Blender 3D modeling enhancements, cl_khr_extended_bit_ops support for OpenCL, additional PCI IDs for Raptor Lake Refresh, and more. Major focus lies on Intel Xe kernel driver support.