Today’s GPUs are capable of amazing things and, as a processor type, have found their way into almost every imaginable electronic device, from cars to rockets, from TVs to smartphones, and of course game consoles and PCs. Yet the journey that got us to the powerful Nvidia GeForces and AMD Radeons we use today was a long one, and we can thank many determined visionaries for blazing the path forward.

Recently, some of those scientists discussed their early work in a live virtual SIGGRAPH Pioneers Panel called “Chasing Pixels: The Pioneering Graphics Processors,” moderated by Jon Peddie, himself one of the pioneers in the graphics industry, and hosted by Ed Kramer, current chair of the SIGGRAPH Pioneers. As Peddie promised at the start of the presentation, “What you are going to be hearing tonight is an explanation as to how we got the GPU.” The panelists delivered on that promise.

“We take GPUs for granted today. I can assure you they didn’t always exist,” said Peddie, who just wrote a book about graphics processors called The History of the GPU, which will be available later this year. “It was no short trip,” he added of the journey that led to the GPU.

The work toward GPU development, believe it or not, began back in the 1960s and, after decades of effort, reached the point of a fully integrated GPU in the late 1990s. “It took Moore’s law and a lot of hard and creative work to get that integrated chip,” Peddie said.

Nick England and Mary Whitton

The discussion began with Nick England and his partner, Mary Whitton, “early adventurers who didn’t know you couldn’t make a graphics processor with a bunch of TI 7474 chips and just went ahead and did it,” said Peddie. “Theirs were probably the first GPU-like processors that got built with discrete components.”

England recounted the work he and others, including Henry Fuchs, were doing at the University of North Carolina, which included his first programmable graphics processor. It drove a 3D vector display as well as a color raster display built at the lab. All of it was state of the art in 1972: a fully functioning computer with an integrated 3D display, plus an auxiliary processor and a minicomputer that drove the color raster display.

By 1976 or so, the group was joined in the lab by Turner Whitted, who was doing curved surface displays, “the kind of graphic you’d really like to do on a graphics processor,” said England. He then built a 32-bit graphics processor using the AMD 2900 family of bit-slice processors, in which each large chip handled 4 bits of the data path. “I tried to do a lot of things at once and not just make a general-purpose computer, but rather one that was kind of optimized for doing graphics and imaging operations,” said England. “I tried to do something that was flexible. It wasn’t a pipeline design, but rather a bus-oriented design.”
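The bit-slice idea can be illustrated with a minimal Python sketch, assuming the simplest possible arrangement: eight 4-bit slices chained by a ripple carry to form a 32-bit adder. The function names `slice_add` and `add32` are hypothetical, and this is not a model of the AMD 2900 instruction set or of England's actual design; it only shows how narrow slices combine into a wide data path.

```python
SLICE_BITS = 4   # each slice handles 4 bits, as described in the text
NUM_SLICES = 8   # assumption: 8 slices x 4 bits = one 32-bit data path


def slice_add(a4, b4, carry_in):
    """Add two 4-bit values plus a carry; return (4-bit sum, carry out)."""
    total = a4 + b4 + carry_in
    return total & 0xF, total >> 4


def add32(a, b):
    """Add two 32-bit values by rippling a carry through eight 4-bit slices."""
    result, carry = 0, 0
    for i in range(NUM_SLICES):
        shift = i * SLICE_BITS
        a4 = (a >> shift) & 0xF          # the 4 bits this slice sees
        b4 = (b >> shift) & 0xF
        s4, carry = slice_add(a4, b4, carry)
        result |= s4 << shift            # place the slice's sum in position
    return result, carry                 # carry is the overflow out of bit 31


if __name__ == "__main__":
    total, overflow = add32(0x12345678, 0x11111111)
    print(hex(total), overflow)
```

The ripple carry is chosen here only for brevity; a real bit-slice machine could use faster carry logic between slices.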

Peddie commented that England had invented a VLIW design, one of the first.

People who visited the lab were impressed and inquired about having one built for them, so England and Whitton decided to start a company, Ikonas Graphics Systems. Their first mission statement in 1978 was to strive to meet the graphics requirements of advanced research groups. “That’s not a good business plan, folks,” England noted.

Nevertheless, the commercial version of what he had designed at NC State University used 64-bit instructions. One routine for drawing vectors was a one-instruction loop: not one instruction inside a loop, but an entire loop that was a single instruction. “It just repeated over and over again until it jumped to another instruction to repeat it over and over,” England said. “Our idea was to do things for other people like us, researchers and research labs, so we made everything open, all the source code.”

The hardware was also open: people were given the schematics, the interface, and bare boards. “People built crazy things. We built crazy things,” England added.

Whitton, meanwhile, worked on the matrix multiplier and independently programmed the co-processor on the main Ikonas bus, a processor that, she said, could run completely independently. This was her master’s thesis project at NC State University, for which she developed a patch subdivision algorithm.

The board (12 inches square, with 10 inches square of usable space for logic), with an 8-bit frame buffer, was built in the back room of their rental house in Raleigh, NC. The prototype was retroactively named RDS-1000 (Raster Display System-1000). In 1979, they began delivering the RDS-2000. In late 1980 came the RDS-3000, which was about the size of a dorm refrigerator. Despite its size, the Ikonas RDS-3000 was the ancestor of today’s programmable graphics processing units.

Peddie commented that today we call such matrix multipliers “tensor engines,” and they are the heart of AI training systems.

A lot of people doing CGI in the early days used the Ikonas systems, including groundbreakers Robert Abel and Lucasfilm. In 1985, Tim van Hook wrote microcode for solid modeling, so that solid regions, rather than just the front-most surface held in a Z buffer, could be defined within a buffer. Van Hook then wrote a ray tracer, and it ran within this graphics processor designed in the late 1970s. Although it did not run in real time, it ran about 100 times faster than any computer at the time, England said.

England, Whitton, and van Hook started a company called Trancept Systems in 1986. Their goal was to develop a flexible, programmable, high-performance graphics and imaging accelerator for computer workstations. The start-up had a short lifespan: it was sold to Sun Microsystems a year and a half later. But not before the team introduced the TAAC-1 for Sun Microsystems workstations, which consisted of two large PC boards, one full of video RAM, the other holding a micro-programmed wide-instruction-word (200 bits) processor optimized for graphics and imaging operations.

Van Hook eventually became the graphics architect at SGI for the Nintendo 64 platform.

Henry Fuchs

Around this same time period, a young professor at the University of North Carolina at Chapel Hill had this “crazy idea” that every pixel should have its own processor. “Nuts, right?” said Peddie. “But what Henry [Fuchs] knew that I didn’t was that Moore’s law worked and it was going to keep working. And he had the vision to see into the future that was going to happen. So, he created a hierarchy of processors down to a tiny processor per pixel, eventually. But his team couldn’t wait, so they did it the hard way—they built it—one processor at a time.”

Fuchs, however, interjected that even though the idea started as one tiny processor per pixel, that wasn’t the main idea, because there wasn’t enough power to do real-time rendering.

Fuchs stated that he had a germ of an idea that was picked up by grad student John Poulton, and together they worked from 1980 until the late ’90s. Fuchs’ early work introduced the use of binary space partitioning trees. With Poulton, he worked on rendering algorithms and hardware, including Pixel-Planes (a VLSI-based raster graphics system) and PixelFlow, developed with HP.

Steve Molnar

After a brief statement by Fuchs, Steve Molnar spoke. A grad student at UNC Chapel Hill at the time, he wanted to be part of Fuchs’ Pixel-Planes project. He played a video that offered a glimpse into the work done by the group and a look at the prototype of the Pixel-Planes graphics system at the Micro Electronic Systems Laboratory of UNC Chapel Hill’s Department of Computer Science, a system that rapidly generated realistic, smooth-shaded images of three-dimensional objects and scenes.

Pixel-Planes 4 (circa 1986)

“The machine’s simplest software executed a Z buffer visible surface algorithm from a scene description stored in a conventional display list,” Molnar explained. There was no rendering engine outside the frame buffer that converted a polygon into pixels and then stored each pixel in a conventional frame buffer memory. The display list, its traversal, the geometric transformations, and the conversion to the Pixel-Planes commands were all handled by a pipeline of three commercially available array processors made by Mercury Systems. The Pixel-Planes commands were sent to 2,048 custom memory chips residing on 32 frame buffer memory boards; each board contained 64 custom chips. Each chip contained memory and processing for 128 pixels.
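The figures Molnar quoted are self-consistent: 32 boards × 64 chips gives 2,048 chips, and 2,048 chips × 128 pixels gives 262,144 pixels, which happens to equal 512 × 512 (the article does not state the display resolution, so that match is only an observation). The Python sketch below checks that arithmetic and shows a minimal Z-buffer visible-surface test. It loops over fragments serially on one processor, whereas the machine described kept the per-pixel processing inside the frame buffer chips; the function name `zbuffer_render` and the fragment format are assumptions made for illustration.

```python
# Figures quoted in the text.
BOARDS, CHIPS_PER_BOARD, PIXELS_PER_CHIP = 32, 64, 128
TOTAL_CHIPS = BOARDS * CHIPS_PER_BOARD           # 2,048 chips
TOTAL_PIXELS = TOTAL_CHIPS * PIXELS_PER_CHIP     # 262,144 pixels


def zbuffer_render(width, height, fragments, background=0):
    """Keep, for each pixel, the color of the nearest fragment.

    fragments: iterable of (x, y, depth, color); smaller depth means nearer.
    Returns a height x width grid of colors.
    """
    depth = [[float("inf")] * width for _ in range(height)]
    color = [[background] * width for _ in range(height)]
    for x, y, z, c in fragments:
        inside = 0 <= x < width and 0 <= y < height
        if inside and z < depth[y][x]:   # the Z-buffer visibility test
            depth[y][x] = z
            color[y][x] = c
    return color


if __name__ == "__main__":
    print(TOTAL_CHIPS, TOTAL_PIXELS)
    image = zbuffer_render(2, 1, [(0, 0, 5.0, "far"), (0, 0, 2.0, "near")])
    print(image)
```

Because each pixel's test depends only on that pixel's stored depth, the inner comparison is the part that lends itself to one small processor per pixel.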

Marc Olano

Marc Olano, a grad student at UNC from 1990 to about 1998, joined the project after Pixel-Planes 5 was constructed (1989–1990), and he was there for the development of PixelFlow. His dissertation focused on getting shading languages to run on “these crazy beasts of machinery.”

“What we decided to do to improve the utilization was to tile the screen. So, the next generation, Pixel-Planes 5, was built with a number of tiled rasterizers, each of the rasterizers was 128 × 128 pixels, and it could move around and render any number of 128 × 128-pixel regions of the screen,” Olano said. “It would receive the rendering commands and coefficients for pixels, for primitives in a given tile, then it would process all of those, figure out the final frame buffer values for those pixels, send them to a frame buffer, and then move on and do the next one.”
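The tiling step Olano describes can be sketched in a few lines of Python. This is a simplified illustration, assuming each primitive is represented only by an inclusive screen-space bounding box; the function name `bin_primitives` and the data layout are hypothetical and do not reflect how Pixel-Planes 5 actually stored its rendering commands.

```python
TILE = 128  # tile edge in pixels, matching the 128 x 128 regions in the text


def bin_primitives(primitives, width, height):
    """Group primitives by the screen tiles their bounding boxes overlap.

    primitives: list of (xmin, ymin, xmax, ymax) inclusive pixel bounds.
    Returns a dict mapping (tile_x, tile_y) -> list of primitive indices.
    """
    bins = {}
    max_tx = (width - 1) // TILE
    max_ty = (height - 1) // TILE
    for index, (xmin, ymin, xmax, ymax) in enumerate(primitives):
        # Skip primitives that lie entirely off screen.
        if xmax < 0 or ymax < 0 or xmin >= width or ymin >= height:
            continue
        tx0, tx1 = max(xmin, 0) // TILE, min(xmax // TILE, max_tx)
        ty0, ty1 = max(ymin, 0) // TILE, min(ymax // TILE, max_ty)
        for ty in range(ty0, ty1 + 1):
            for tx in range(tx0, tx1 + 1):
                bins.setdefault((tx, ty), []).append(index)
    return bins


if __name__ == "__main__":
    prims = [(10, 10, 20, 20), (120, 120, 140, 130)]
    for tile, members in sorted(bin_primitives(prims, 512, 512).items()):
        # A rasterizer would process every primitive in this tile,
        # write the finished pixels to the frame buffer, then move on.
        print(tile, members)
```

Once primitives are binned this way, each rasterizer only touches the work for its current tile, which is the utilization gain the quote refers to.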

This next system they built was more complicated and had to have more geometry processing. They also began investigating more sophisticated rendering algorithms like Phong shading.

At this point, Peddie pointed out that what this group had accomplished was deferred rendering, which is now a big deal for cloud systems that people use with their smartphones every day. As for tile-based rendering, they beat Microsoft to the punch when Microsoft began working on its Talisman project to build a new 3D graphics architecture based on tiling.

Molnar interjected, referring back to PixelFlow, whose processor width had increased from a single bit to 8 bits to handle the increased computation. The team was also doing early real-time programmable shading and built a floating-point library so that shading algorithms could be coded in standard floating-point. It wasn’t always 32 bits, he said, but it was floating-point.

Pete Segal

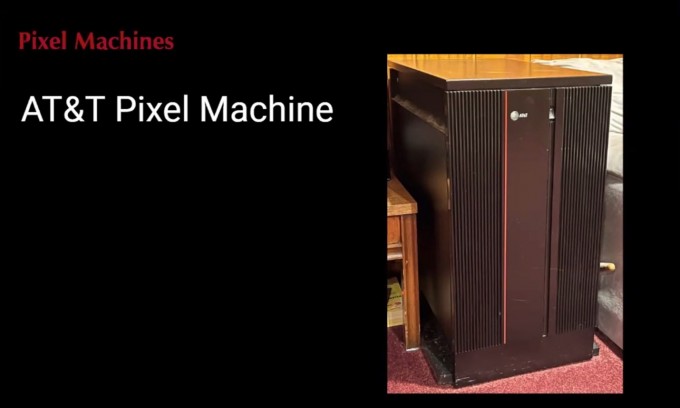

While all the research and development were occurring in North Carolina, a small group within AT&T also thought they could build a super-pixel processor, and Pete Segal became involved in that. The Pixel Machine product debuted at SIGGRAPH 1987; in 1991, they tried to turn it into a PC card graphics accelerator. When AT&T decided there wasn’t enough profit in it, they disbanded the organization and Segal licensed the software and started his own company called Spotlight Graphics. He then licensed it to Bentley Systems, and their raytracing and photorealistic rendering software was in production as part of MicroStation through about 2009. “So, a nice 22-year lifespan on the software, which was pretty cool,” he added.

Segal detailed the Pixel Machine and its developments, which enabled the creation of high-quality graphics for interactive modeling, data visualization, and realistic rendering.

Curtis Priem

Eventually, though, these giant machines took advantage of Moore’s law. Curtis Priem was at Sun Microsystems from 1986 to 1993. His team’s goal was to take things like what Segal’s team was building (which filled boxes and racks), cram them onto one little board, and then cram that into a Sun system. They succeeded.

Priem, in fact, developed the GX graphics chip at Sun. He also designed the first accelerated graphics card for the PC, the IBM Professional Graphics Adapter, which consisted of three interconnected PCBs and contained its own processor and memory.

IBM’s Professional Graphics Adapter, also known as the Professional Graphics Controller. (Source: Wikipedia)

For some show-and-tell, Priem held up an image of a board, the IBM Professional Graphics Adapter (PGA), which he noted was the first accelerated graphics card in the PC industry. It was a three-board, two-slot system for the IBM XT and was used mostly for CAD.

Priem then took the audience through the early development occurring at SGI, and then detailed the cofounding of Nvidia with Jensen Huang and Chris Malachowsky in 1993. The first board was called the NV1. Eventually, developments led to the RIVA 128, which crushed all benchmarks. As Priem pointed out, it was the first 128-bit 3D processor. Two years later, Nvidia introduced the GPU. He recalled that Dan Vivoli, Nvidia’s marketing person, came up with the term GPU, for graphics processing unit. “I thought that was very arrogant of him because how dare this little company take on Intel, which had the CPU,” he said.

Of course, we know what happened next, as Nvidia today is synonymous with the term “GPU.”

Just as the journey to the first GPU recounted in Peddie’s book was “no short trip,” neither, really, was the presentation by these early pioneers. Even the two hours dedicated to this trip down “memory” lane proved to be not enough time, and the participants, as well as the audience, were promised a second part sometime in the future. You can bet they will be held to that promise.

Note: Images in this story are from screen grabs taken during the panel, as presented by the speakers.