Intel plans to grab a piece of the enterprise GenAI market with Gaudi 3. Its cited performance looks good, though the numbers have not yet been independently verified. Its ace, however, is pricing, which we have just learned is significantly lower than that of rival Nvidia’s competing offerings. Gaudi 3 is not a traditional GPU, but it is GPU-like and has much of the programmability GenAI requires. Even so, Intel plans to replace Gaudi 3 with a true GPU in the next generation to deliver more programmability.

What do we think? We like Gaudi, which resembles GPUs in its use of parallel processing and its focus on accelerating AI workloads. Its differentiated architecture, efficiency on AI tasks, networking capabilities, and memory configuration make it an interesting tool for deep learning compared with the more general-purpose design of GPUs.

Intel’s launch of Gaudi 3 shows an aggressive intent to fight Nvidia for share in the high-end AI space. Pricing will matter, but just as relevant is giving the market a credible alternative, which some buyers will adopt simply to avoid becoming too reliant on Nvidia. Future, more GPU-like generations will follow and add vital features such as sparsity support. (Rivals already have it: AMD offers sparsity in its GPUs’ Matrix Core technology, and Nvidia offers it in its GPUs’ Tensor Cores.)

Gaudi 3 pricing is its saving grace

Intel introduced its Gaudi 3 platform at its Vision 2024 event in Phoenix this past April. Gaudi 3 is a key product for competing with Nvidia in enterprise GenAI. It is an important step, even though the product won’t continue in its current form: the next generation will be a true GPU, and future iterations are likely to be CPU/GPU combinations in a style pioneered by AMD.

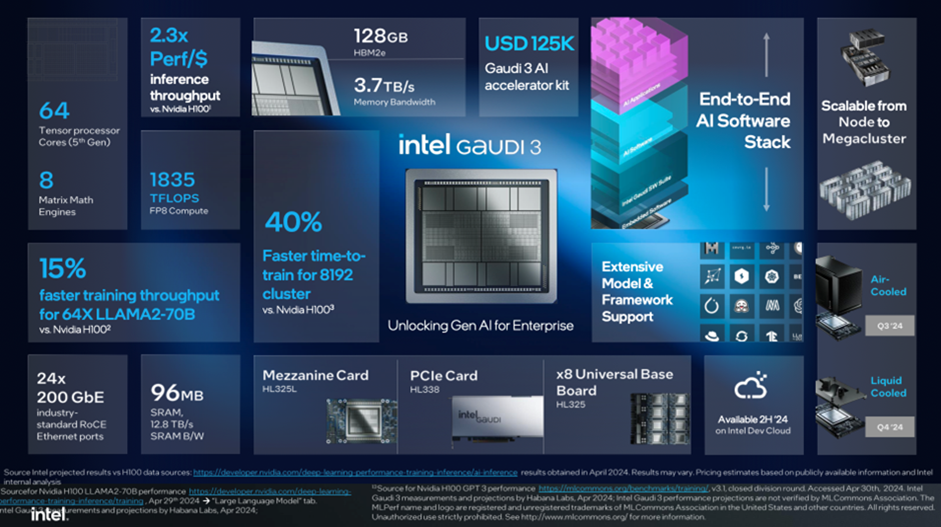

Gaudi 3 comes in three form factors—an accelerator card (HL-325L), a universal baseboard (HL-325), and, for the first time, a PCIe CEM add-in card (HL-338).

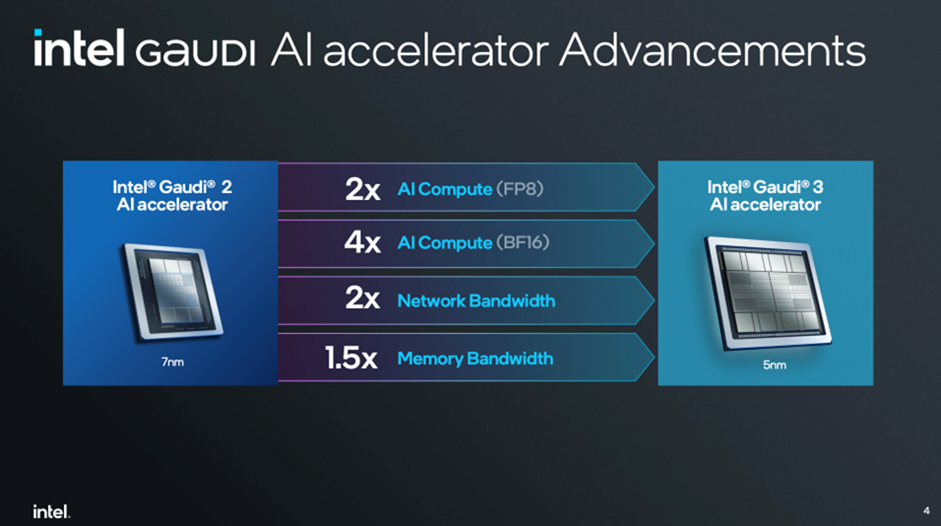

Initially, Gaudi 3 looks competitive. Intel cites 40% faster time to train for an 8,192-accelerator Gaudi 3 cluster versus Nvidia H100, and 15% faster training throughput for a 64-accelerator cluster versus H100 on the Llama-2-70B model. It is worth noting that these Gaudi 3 results have not yet been verified by the MLCommons Association.

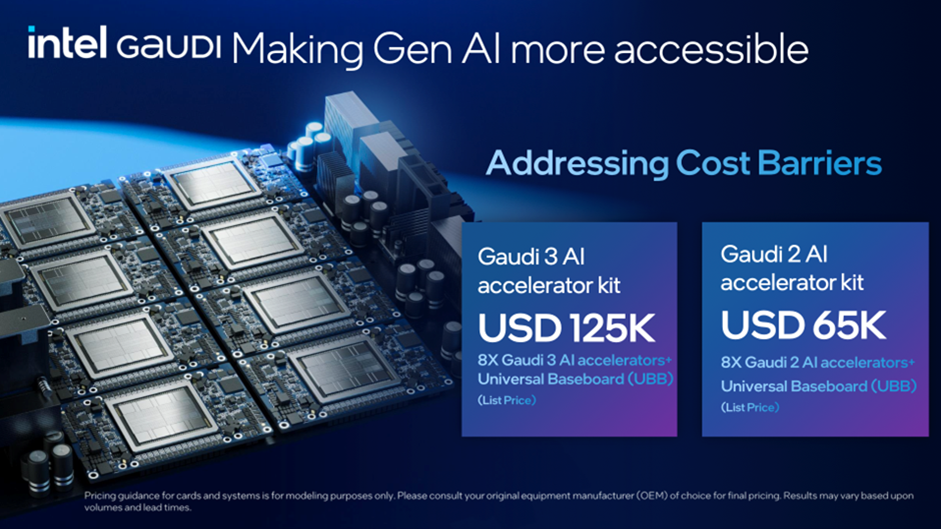

But price is likely to be the deciding factor in whether Gaudi 3 can take off, and now we know where we stand. A kit including eight Intel Gaudi 3 accelerators with a universal baseboard (UBB) will list at $125,000, which Intel estimates to be two-thirds the cost of comparable competitive platforms. A kit including eight Intel Gaudi 2 accelerators with a UBB lists at $65,000, which Intel estimates to be one-third the cost of comparable competitive platforms.
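The kit prices above can be broken down with a few lines of arithmetic. This is a minimal sketch that assumes Intel's "two-thirds" and "one-third" figures mean the Gaudi kit costs that fraction of a comparable competing kit; the competitor prices it produces are back-calculated estimates, not published list prices.

```python
from fractions import Fraction

# Kit list prices quoted by Intel (eight accelerators plus a universal baseboard).
GAUDI3_KIT_USD = 125_000
GAUDI2_KIT_USD = 65_000
ACCELERATORS_PER_KIT = 8


def per_accelerator(kit_price: int, count: int = ACCELERATORS_PER_KIT) -> Fraction:
    """Kit price spread evenly across its accelerators (the baseboard's cost is folded in)."""
    return Fraction(kit_price, count)


def implied_competitor_price(kit_price: int, cost_fraction: Fraction) -> Fraction:
    """ASSUMPTION: kit_price == cost_fraction * competitor_price; solve for the competitor."""
    return Fraction(kit_price) / cost_fraction


if __name__ == "__main__":
    print(f"Gaudi 3 per accelerator: ${float(per_accelerator(GAUDI3_KIT_USD)):,.0f}")
    print(f"Gaudi 2 per accelerator: ${float(per_accelerator(GAUDI2_KIT_USD)):,.0f}")
    print(f"Implied competitor kit (vs Gaudi 3): "
          f"${float(implied_competitor_price(GAUDI3_KIT_USD, Fraction(2, 3))):,.0f}")
    print(f"Implied competitor kit (vs Gaudi 2): "
          f"${float(implied_competitor_price(GAUDI2_KIT_USD, Fraction(1, 3))):,.0f}")
```

This works out to $15,625 per Gaudi 3 accelerator and $8,125 per Gaudi 2 accelerator, with implied competing kits of roughly $187,500 and $195,000. Because the baseboard's cost is spread across the eight accelerators, the per-accelerator figure overstates the price of a single card.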

That means Gaudi 3 will sell for significantly less than Nvidia’s competing platforms. Intel puts its price-performance advantage over H100 at 2.3× performance per dollar for inference throughput and 1.9× performance per dollar for training throughput.
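Performance per dollar combines two ratios: relative performance and relative price. The sketch below shows that relationship. Intel has not said which H100 prices it used, so the example assumes, purely for illustration, the same two-thirds price ratio as the kit comparison; under that assumption, the claimed 2.3× and 1.9× figures would correspond to roughly 1.53× and 1.27× raw throughput.

```python
from fractions import Fraction


def perf_per_dollar_advantage(perf_ratio: Fraction, price_ratio: Fraction) -> Fraction:
    """(perf_A / price_A) / (perf_B / price_B), where
    perf_ratio = perf_A / perf_B and price_ratio = price_A / price_B."""
    return perf_ratio / price_ratio


def implied_perf_ratio(ppd_advantage: Fraction, price_ratio: Fraction) -> Fraction:
    """Invert the relationship: raw performance ratio needed for a given perf/$ advantage."""
    return ppd_advantage * price_ratio


# ASSUMPTION: Gaudi 3 costs two-thirds as much as the H100 platform it is compared against.
PRICE_RATIO = Fraction(2, 3)

inference = implied_perf_ratio(Fraction("2.3"), PRICE_RATIO)  # exactly 23/15
training = implied_perf_ratio(Fraction("1.9"), PRICE_RATIO)   # exactly 19/15

print(f"implied inference throughput ratio: {float(inference):.2f}x")  # 1.53x
print(f"implied training throughput ratio:  {float(training):.2f}x")   # 1.27x
```

Using `Fraction` keeps the ratios exact, so converting back with `perf_per_dollar_advantage(inference, PRICE_RATIO)` returns precisely 2.3 rather than a rounded float.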

Anil Nanduri, VP and GM of the DCAI category and head of Intel’s AI Acceleration Office, says that makes Gaudi 3 “an amazing value for compute and a great alternative to existing solutions in market.”

There are already aggressive deals on Gaudi in the market, with Supermicro selling a full Gaudi 2 server for $90,000 today.

ODM support for Gaudi 3 looks good on paper: Asus, Foxconn, Gigabyte, Inventec, Quanta, and Wistron are joining current Gaudi system providers Dell, Hewlett Packard Enterprise, Lenovo, and Supermicro, expanding the range of production systems. Of course, it remains to be seen how effectively and aggressively they do so.

Where does Gaudi 3 sit in the market?

Intel paints an interesting picture of the AI future as we transition from AI assistants to AI agents, the distinction being that an agent can reason. The company argues that GenAI will drive as large a step-function change as the Internet and cloud eras did.

Intel says Gaudi was built specifically for GenAI, to close what it calls the enterprise GenAI gap. Most models we encounter today are trained on the 5%–10% of data that is publicly Web-crawlable, whereas, according to Intel, more than 90% of data sits inside enterprises. Intel believes enterprises want to unlock that data using GenAI.

Historically, enterprise data has been handled by CPUs, but GenAI runs on GPUs. Gaudi’s product positioning is based on bridging the two, bringing the CPU and GPU domains together without compromising trust and security.

Apparently, it’s easy. With standard modular hardware and software and multiple vendors, “it just should work,” said Intel’s Nanduri.

Gaudi 3 is not a traditional GPU, though it resembles one and has much of the programmability required for GenAI. Does it have enough? Perhaps not: Intel plans to replace Gaudi 3 with a true GPU in the next generation to deliver even more programmability. Another feature missing today is sparsity support, which Nvidia offers and which is becoming more relevant to performance in some applications.

Gaudi 3 scales from a single eight-accelerator server up to tens of thousands of accelerators, using a three-switch setup built on simple Ethernet networking. That means compute scales from petaFLOPS to exaFLOPS.
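The scaling claim is easy to put into numbers. The sketch below counts the eight-accelerator servers in a cluster and multiplies out peak aggregate compute. The per-accelerator figure is a hypothetical placeholder (1 petaFLOPS), not a Gaudi 3 specification; substitute a datasheet value for the numeric precision you care about.

```python
import math

ACCELERATORS_PER_SERVER = 8  # one eight-accelerator Gaudi 3 server


def servers_needed(accelerators: int) -> int:
    """Number of eight-accelerator servers required to host a cluster."""
    return math.ceil(accelerators / ACCELERATORS_PER_SERVER)


def aggregate_pflops(accelerators: int, pflops_per_accelerator: float) -> float:
    """Peak aggregate compute in petaFLOPS (ignores networking and utilization losses)."""
    return accelerators * pflops_per_accelerator


# HYPOTHETICAL per-accelerator peak, for illustration only.
PFLOPS_PER_ACCELERATOR = 1.0

for size in (8, 64, 8_192):
    total = aggregate_pflops(size, PFLOPS_PER_ACCELERATOR)
    print(f"{size:>6} accelerators -> {servers_needed(size):>5} servers, "
          f"{total:,.0f} PFLOPS ({total / 1000:.3f} EFLOPS)")
```

With these placeholder numbers, an 8,192-accelerator cluster needs 1,024 servers and crosses into exaFLOPS territory. Sustained throughput will be lower than this peak because of communication and utilization overheads.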

What really matters is performance at scale. Intel claims 40% faster time to train than Nvidia H100, based on an 8,192-accelerator Gaudi 3 cluster, and 2× higher inference throughput than H100 when running common LLMs (1.5× versus Nvidia H200).

Intel knows it must quell any doubts about its software ecosystem. Its strategy is to provide an open, easy-to-use software stack under the framework of the Linux Foundation. Intel claims that more than 200,000 transformer models on Hugging Face are easily enabled on the Intel Gaudi platform, and Intel Developer Cloud offers Gaudi for customers to try with those models.

Gaudi 3 was accelerating Meta Llama 3 on Day 0. Next, the Red Hat OpenShift AI stack will be enabled on Gaudi and Xeon 6. Intel Labs is creating GenAI proof points such as multimodal panorama generation with photorealistic 3D images; the code is on GitHub now.

Gaudi enterprise customers and partners include Bharti Airtel, Bosch, CtrlS, Lumen, Articul8, IFF, Infosys, Landing AI, Naver, and NIQ. Intel gave us a couple of examples of how customers are using Gaudi today: Ola is building Krutrim, a multilingual Indian model, using Gaudi 2, and Seekr is training a trustworthy LLM for Moderna, Shopify, and others.

Gaudi 3 is already sampling, with general availability planned for Q3 2024.