Intel’s new Xeon 6 processor is optimized for AI and edge computing, doubling the core count to 128 with improved performance. Intel is also planning future releases like Clearwater Forest in 2025, built on the advanced 18A process node. At Hot Chips 2024, Intel highlighted the Xeon 6’s capabilities for edge-specific tasks and introduced an optical compute interconnect chiplet for AI infrastructure. These developments are essential parts of Intel’s strategy to stay competitive in the rapidly evolving AI landscape.

What do we think? AI is something like two years away from mainstream acceptance. The AI PC has already arrived, giving consumers options that elevate productivity, creativity, gaming, entertainment, and security. The next focus is how businesses can leverage the power of edge computing and AI to improve decision-making, increase automation, and gain value from proprietary data.

Intel’s Xeon 6 succeeds the 5th Gen Xeon and features up to 128 cores, double the count of the previous generation. It incorporates Redwood Cove cores that support AMX FP16 acceleration, which is essential for various AI tasks. It is built on the Intel 3 process node, which the company claims offers 18% more performance at the same power level and 10% greater density. While it appears to be a robust option for edge computing, it is clearly a transitional product. Intel must introduce something revolutionary by 2026, as AI performance will be crucial for the company’s future success.

What we know so far is that a new E-core Xeon product, Clearwater Forest, will arrive in 2025, built on the Intel 18A process node and delivering even higher core counts. Intel says the product is booting and already in use inside Intel. Intel CEO Pat Gelsinger says the 18A process node’s performance is “a little bit ahead” of TSMC’s N2 and that 18A will ship earlier (TSMC counters that its 3nm is as good). Either way, Intel 18A will need to deliver significantly better transistor and chip performance if the company wants to be in the AI race.

Intel at Hot Chips

At Hot Chips 2024, Praveen Mosur, Intel fellow and network and edge silicon architect, unveiled new details about the Intel Xeon 6 system-on-chip (SoC) design and how it can address edge-specific challenges such as unreliable network connections and limited space and power. Intel is calling Xeon 6 “the company’s most edge-optimized processor to date,” underscoring how important the edge is to Intel’s fight back against Nvidia. Intel bases that claim on knowledge gained from more than 90,000 edge deployments worldwide.

The single-system architecture with integrated AI acceleration scales from edge devices to edge nodes and can manage full AI workflows, from data ingest to inferencing, for improved decision-making.

The Intel Xeon 6 SoC combines the compute chiplet from Intel Xeon 6 processors with an edge-optimized I/O chiplet built on Intel 4 process technology.

Features of the Xeon 6 SoC include:

- Up to 32 lanes of PCIe 5.0.

- Up to 16 lanes of Compute Express Link (CXL) 2.0.

- 2× 100G Ethernet.

- Four and eight memory channels in compatible BGA packages.

- Edge-specific enhancements, including extended operating temperature ranges and industrial-class reliability, making it ideal for high-performance rugged equipment.
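To put the I/O figures in the list above into rough perspective, the sketch below converts them into raw one-direction bandwidth. The 32 GT/s per-lane rate and 128b/130b encoding are standard PCIe 5.0 parameters rather than figures from Intel’s presentation, the function names are ours, and the results ignore protocol overhead, so treat them as upper bounds and not as measured throughput.

```python
# Rough, back-of-the-envelope I/O bandwidth for the Xeon 6 SoC feature list.
# Assumptions (not from the article): PCIe 5.0 runs at 32 GT/s per lane with
# 128b/130b encoding; protocol overhead above the encoding layer is ignored.

PCIE5_GT_PER_S = 32          # giga-transfers per second, per lane
PCIE5_ENCODING = 128 / 130   # usable fraction after 128b/130b encoding


def pcie5_gbytes_per_s(lanes: int) -> float:
    """Raw one-direction PCIe 5.0 bandwidth in GB/s for a given lane count."""
    return lanes * PCIE5_GT_PER_S * PCIE5_ENCODING / 8


def ethernet_gbytes_per_s(ports: int, gbps_per_port: float) -> float:
    """Raw one-direction Ethernet bandwidth in GB/s across all ports."""
    return ports * gbps_per_port / 8


pcie = pcie5_gbytes_per_s(32)          # "up to 32 lanes of PCIe 5.0"
eth = ethernet_gbytes_per_s(2, 100)    # "2x 100G Ethernet"

print(f"PCIe 5.0 x32: ~{pcie:.0f} GB/s per direction")
print(f"2x 100G Ethernet: ~{eth:.0f} GB/s per direction")
```

On these assumptions, the PCIe lanes offer roughly five times the raw bandwidth of the two Ethernet ports combined.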

Intel’s Xeon 6 SoC also includes features designed to increase the performance and efficiency of edge and network workloads:

- New media acceleration to enhance video transcode and analytics for live OTT, VOD, and broadcast media.

- Intel Advanced Vector Extensions and Intel Advanced Matrix Extensions for improved inferencing performance.

- Intel QuickAssist Technology for more efficient network and storage performance.

- Intel vRAN Boost for reduced power consumption for virtualized RAN.

- Support for Intel Tiber Edge Platform, which allows users to build, deploy, run, manage, and scale edge and AI solutions on standard hardware using a cloud-like model.

4 Tbps optical compute interconnect chiplet for XPU-to-XPU connectivity

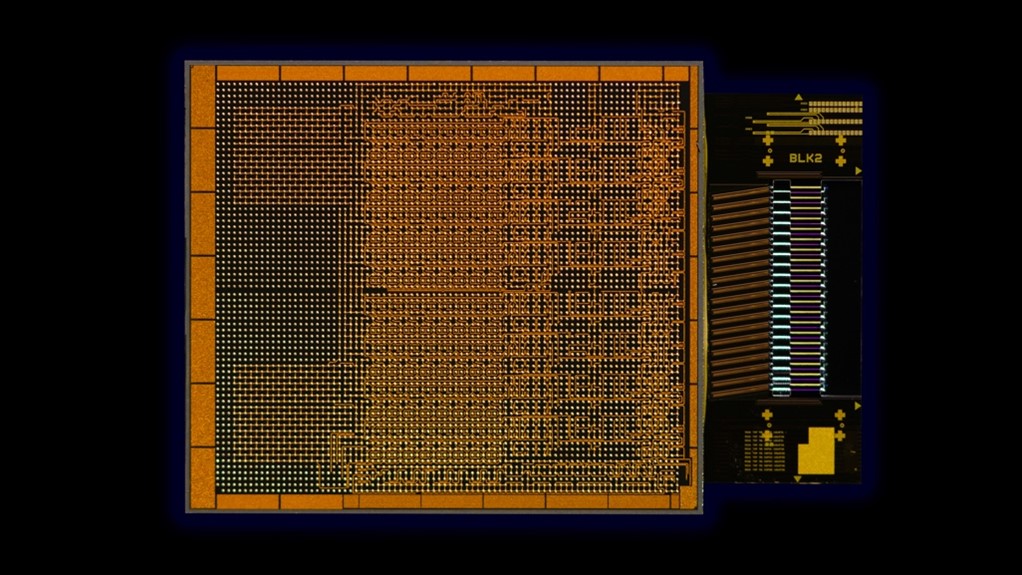

Also at Hot Chips, Intel’s Integrated Photonics Solutions (IPS) Group demonstrated a fully integrated optical compute interconnect chiplet co-packaged with an Intel CPU and running live data.

Saeed Fathololoumi, photonic architect in the IPS Group, covered the OCI chiplet, which is designed to support 64 channels of 32 Gbps data transmission in each direction over up to 100 m of optical fiber. The design addresses AI infrastructure’s growing demands for higher bandwidth, lower power consumption, and longer reach.
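The channel figures line up with the 4 Tbps in the headline if that number is read as the bidirectional total. The short sketch below works through the arithmetic; it uses only the channel count and per-channel rate quoted above, treats them as raw line rates (no encoding or protocol overhead), and the bidirectional reading is our interpretation.

```python
# Aggregate OCI chiplet bandwidth from the figures quoted in the article.
# Assumption: 32 Gbps is the raw per-channel line rate, with no overhead removed.

CHANNELS = 64            # channels in each direction
GBPS_PER_CHANNEL = 32    # data rate per channel, in Gbps


def aggregate_gbps(channels: int, gbps_per_channel: int) -> int:
    """Raw aggregate bandwidth in one direction, in Gbps."""
    return channels * gbps_per_channel


per_direction_gbps = aggregate_gbps(CHANNELS, GBPS_PER_CHANNEL)
bidirectional_gbps = 2 * per_direction_gbps

print(f"Per direction: {per_direction_gbps} Gbps (~{per_direction_gbps / 1000:.1f} Tbps)")
print(f"Bidirectional: {bidirectional_gbps} Gbps (~{bidirectional_gbps / 1000:.1f} Tbps)")
```

That works out to 2,048 Gbps each way, or 4,096 Gbps in both directions combined.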

This high-bandwidth interconnect targets scalability of CPU/GPU cluster connectivity and novel computing architectures, including coherent memory expansion and resource disaggregation in emerging AI infrastructure for data centers and high-performance computing (HPC) applications.

Intel also presented Lunar Lake and Gaudi 3 at Hot Chips, both of which we already covered in depth at Computex. Panther Lake, the Lunar Lake successor, is due in 2025, and the company says the platform is already booting and running Windows inside Intel.