Intel has shipped 5 million AI PCs since announcing them in December, and CEO Pat Gelsinger forecasts 40 million by year-end and over 100 million next year. The February commercial launch of the Core Ultra processor, for which 500 AI models are now optimized, contributed to this growth. Second-generation AI PC CPUs with 3× the performance of the previous generation are shipping, to be followed by third-generation processors such as Arrow Lake and Panther Lake. Gelsinger predicts that by 2026, 50% of edge devices will involve ML and AI. Intel is working with Arm, Nvidia, and Qualcomm to standardize microscaling (MX) data formats that cut latency without sacrificing accuracy. Gaudi 3, Intel's AI processor, is in Gelsinger's words the only benchmarked alternative to Nvidia for training LLMs, and it features enhanced networking. Intel plans to add Gaudi to AI systems next year, along with scalable Ethernet.

What do we think? Intel is on the road to recovery. Its fabs are getting back up to speed, and at a contemporary node, its processors are world-class. The company understands the IT environment, as it always has, and it is bringing a dedicated AI processor to the market.

Intel puts Nvidia on notice, reveals three AI processors

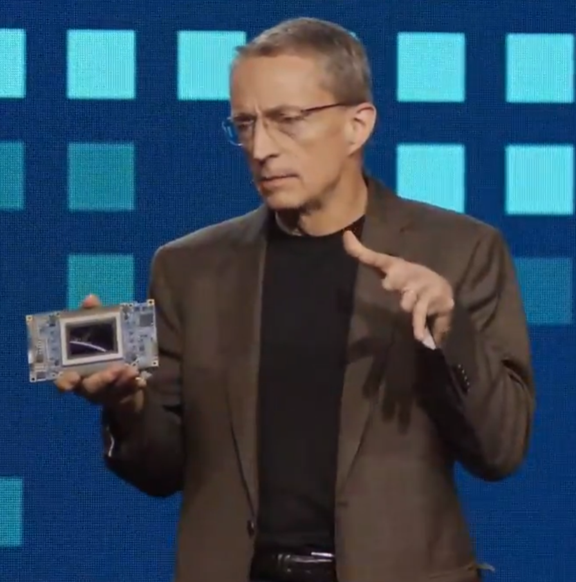

At its Intel Vision conference in Arizona, CEO Pat Gelsinger announced the company’s AI PC, Xeon 6, and Gaudi 3 AI processors. The presentation, aimed at CIOs, CEOs, and IT managers, focused on Intel’s latest offerings and how they will enable an alternative to Nvidia’s solutions for AI training and inference processing in the cloud and on the edge.

Five million AI PCs have shipped since Intel made the announcement in December. Gelsinger forecast that 40 million will have shipped by the end of the year and that over 100 million will ship next year.

That growth is, and will be, partly due to the February launch of the commercial version of the Core Ultra processor. The jubilant CEO said 500 AI models are now optimized for Core Ultra, and while others are just getting started, Intel is shipping (or soon will be) its second-generation AI PC CPUs, with 3× the performance of the previous generation, 100 platform TOPS, and 45 NPU TOPS. They will be followed by Arrow Lake, Panther Lake, and Lunar Lake; the third-generation processors are in the fab, said Gelsinger.

The killer app for the edge is AI. In other words, if you can’t say AI, don’t bother speaking.

Gelsinger predicts that by 2026, 50% of edge devices will involve ML and AI, compared to 5% today. This shift will be propelled by the deployment of retrieval-augmented generation (RAG), which feeds an LLM current, user-specific data at query time instead of relying only on what the model absorbed from Web data during training, which may be out of date.
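To make the RAG idea concrete, here is a minimal sketch of the retrieve-then-augment step. It is a toy, not Intel's implementation: a bag-of-words cosine similarity stands in for the embedding model and vector database a real deployment would use, the helper names (`retrieve`, `build_prompt`) are hypothetical, and the actual LLM call is left out.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query (toy retriever)."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True
    )
    return ranked[:k]


def build_prompt(query, documents, k=2):
    """Augment the user's question with retrieved, current context.

    The returned string would be sent to an LLM; that call is not shown.
    """
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


docs = [
    "Q3 sales in the EMEA region rose 12 percent.",
    "The cafeteria menu changes on Mondays.",
    "EMEA sales targets for Q4 were raised.",
]
print(build_prompt("How did EMEA sales do in Q3?", docs))
```

Because the context comes from the user's own document store at query time, updating those documents updates the answers without retraining the model.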

Intel will reveal more details next week at the AI Open Source Summit.

Gelsinger then proudly showed a wafer of Xeon 6 (Granite Rapids) processors built on the Intel 3 process node. It will go into production this quarter and will be capable of running models with up to 70 billion parameters while maintaining IEEE 754 floating-point accuracy.
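Why data format matters for a 70-billion-parameter model on a CPU is easiest to see from the raw weight footprint. The arithmetic below is a rough sketch that counts weights only (no KV cache or activations) and uses decimal gigabytes; the MXFP4 figure assumes the OCP MX layout of 4-bit elements plus one shared 8-bit scale per 32-element block, so about 4.25 bits per weight.

```python
PARAMS = 70e9  # 70 billion parameters, the upper bound quoted for Xeon 6

# Bits stored per weight. MXFP4 adds one shared 8-bit scale per 32 elements.
BITS_PER_WEIGHT = {
    "FP32": 32,
    "BF16": 16,
    "FP8": 8,
    "MXFP4": 4 + 8 / 32,
}


def weight_gigabytes(params, bits):
    """Weights-only footprint in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits / 8 / 1e9


for fmt, bits in BITS_PER_WEIGHT.items():
    print(f"{fmt:>6}: {weight_gigabytes(PARAMS, bits):7.1f} GB")
```

Going from 16-bit to MXFP4 weights shrinks the model from 140 GB to about 37 GB, which also cuts the memory traffic per generated token.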

Working with Arm, Nvidia, and Qualcomm within the Open Compute Project (OCP) MX alliance, Intel is helping to standardize microscaling (MX) formats, the next-generation data types for training and inference. The new Xeon 6 processors support the MXFP4 format without sacrificing accuracy. In the figures Gelsinger showed, latency falls from 544 ms on 4th-gen Xeon with 16-bit data to 192 ms on 4th-gen with 4-bit data (roughly 3×), to 159 ms on 5th-gen with 4-bit, and to 88 ms on Xeon 6 with 4-bit, which Intel rates as a 6.4× improvement over the 16-bit baseline.
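The core idea of microscaling is that a small block of values shares one power-of-two scale, so each element can be stored in very few bits. The sketch below follows the MXFP4 layout as we understand the OCP MX specification (32-element blocks, FP4 E2M1 elements, a shared 8-bit power-of-two scale), but it is simplified and is not Intel's implementation: ties round toward the smaller magnitude instead of to even, NaN/Inf are ignored, and the shared exponent is not clamped to the 8-bit scale's range. All function names are hypothetical.

```python
import math

# Magnitudes representable by an FP4 E2M1 element (sign is stored separately).
FP4_E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_EMAX = 2      # exponent of the largest E2M1 value: 6.0 = 1.5 * 2**2
BLOCK_SIZE = 32    # elements that share one scale in MXFP4


def quantize_fp4(v):
    """Round v to the nearest E2M1 value, saturating at +/-6.
    Ties go to the smaller magnitude here (the spec uses ties-to-even)."""
    mag = min(abs(v), 6.0)
    nearest = min(FP4_E2M1_MAGNITUDES, key=lambda q: abs(q - mag))
    return math.copysign(nearest, v)


def mxfp4_block(block):
    """Quantize one block to (shared power-of-two exponent, FP4 elements)."""
    max_abs = max(abs(x) for x in block)
    if max_abs == 0.0:
        return 0, [0.0] * len(block)
    # Shared exponent puts the block's largest value in [4, 8);
    # anything above 6 saturates to 6.
    shared_exp = math.floor(math.log2(max_abs)) - E2M1_EMAX
    scale = 2.0 ** shared_exp
    return shared_exp, [quantize_fp4(x / scale) for x in block]


def mxfp4_quantize(values):
    """Split a vector into 32-element blocks and quantize each one."""
    return [mxfp4_block(values[i:i + BLOCK_SIZE])
            for i in range(0, len(values), BLOCK_SIZE)]


def dequantize(shared_exp, elements):
    """Recover approximate values: element * 2**shared_exp."""
    return [e * 2.0 ** shared_exp for e in elements]


exp, elems = mxfp4_block([0.1, -0.75, 3.0, 12.0])
print(exp, elems)              # 1 [0.0, -0.5, 1.5, 6.0]
print(dequantize(exp, elems))  # [0.0, -1.0, 3.0, 12.0]
```

The example shows the trade-off: large values in a block survive well, while small ones (0.1 here) can round to zero because they share the block's scale.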

Gelsinger then introduced Intel’s AI processor, Gaudi 3. It is, said Gelsinger, the only benchmarked alternative to Nvidia for training LLMs.

He also made a big point about its network fabric and said the Ultra Ethernet Consortium is standing up to proprietary systems (i.e., Nvidia’s Spectrum-X platform).

Intel, said Gelsinger, will offer AI NICs and chiplets from the foundry. “We will offer ref design from PC to cloud.”

Intel will be adding Gaudi to AI systems next year with scalable Ethernet.

Gelsinger said Gaudi 3 delivers four times the AI compute of Gaudi 2 in BF16 (bfloat16, or brain floating point), twice the FP8 performance, twice the networking bandwidth, and 1.5× the memory bandwidth.
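BF16 is simply the top 16 bits of an FP32 number: it keeps FP32's sign bit and 8-bit exponent but only 7 mantissa bits. That is why it trains well; it covers the same range as FP32 (up to about 3.4e38, where FP16 tops out at 65,504) at half the storage. The sketch below converts FP32 to BF16 with round-to-nearest-even; the function names are illustrative, inputs must fit in FP32, and NaN/Inf are not handled.

```python
import math
import struct


def fp32_bits(x):
    """Round a Python float to FP32 and return its 32-bit pattern."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_fp32(bits):
    """Reinterpret a 32-bit unsigned int as an FP32 value."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def to_bf16_bits(x):
    """Keep the top 16 bits of FP32 (sign, 8-bit exponent, 7-bit mantissa),
    rounding the dropped 16 bits to nearest, ties to even."""
    bits = fp32_bits(x)
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def to_bf16(x):
    """Round x to the nearest BF16 value and return it as a Python float."""
    return bits_to_fp32(to_bf16_bits(x) << 16)


print(to_bf16(math.pi))  # 3.140625
print(to_bf16(1e38))     # still finite: BF16 shares FP32's exponent range
```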

Citing benchmark tests against Nvidia's H100, Gelsinger said Gaudi 3 is 50% faster in time to train, 50% better on inferencing, and 40% better on inference power efficiency, and that it uses industry-standard Ethernet.

As a final zinger, he added, “The industry is moving away from CUDA models.”

This quarter, Intel will introduce three industry-standard form factors to OEMs: the Mezzanine accelerator card, a PCIe card, and an eight-accelerator baseboard (shown above), which delivers 14.6 PFLOPS of FP8 compute with 1TB of HBM2e and 192 Ethernet connections. The Mezzanine card offers 1.8 PFLOPS of FP8 and 128GB of memory in a 600W TDP.
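A quick sanity check shows how the baseboard totals relate to a single accelerator. The sketch below divides the quoted board figures by eight, assuming "1TB" means 1,024 GB; the dictionary names are ours. Memory comes out to exactly the Mezzanine card's 128GB, Ethernet to 24 connections per accelerator, and compute to about 1.83 PFLOPS, which suggests the card's 1.8 PFLOPS figure is rounded.

```python
ACCELERATORS_PER_BOARD = 8

# Board-level figures quoted in the talk (1 TB assumed to mean 1,024 GB).
BOARD = {"fp8_pflops": 14.6, "memory_gb": 1024, "ethernet_ports": 192}
# Single Mezzanine card figures quoted in the talk.
MEZZANINE = {"fp8_pflops": 1.8, "memory_gb": 128}


def per_accelerator(board, n):
    """Divide each board-level total evenly across n accelerators."""
    return {name: total / n for name, total in board.items()}


per_accel = per_accelerator(BOARD, ACCELERATORS_PER_BOARD)
for name, value in per_accel.items():
    print(f"{name}: {value:g} per accelerator")
```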

This, said Gelsinger, eliminates vendor lock-in with standard Ethernet and an open software environment.

He closed by saying that the industry will need 10,000 times the computing power this decade.