Intel announced that its Gaudi 3 AI accelerators are now available on IBM Cloud. The accelerators offer more BF16 AI compute and higher memory bandwidth than their predecessor, Gaudi 2. The launch is part of Intel's push to compete with Nvidia for AI workloads. Systems built around Gaudi 3 from several hardware vendors are expected in the second quarter. Intel claims Gaudi 3 offers better inference performance and power efficiency than Nvidia's H100 at a lower cost.

At the Intel Vision 2025 conference, Intel announced the availability of Intel Gaudi 3 AI accelerators on IBM Cloud. The accelerators are designed to power AI systems and, compared with their predecessor, Gaudi 2, offer 4x the AI compute for BF16 and 1.5x the memory bandwidth. Gaudi 3 is part of Intel's plan to compete with Nvidia in the market for AI training and inference workloads. Intel said Gaudi 3 will be available in the second quarter in systems from Dell Technologies, HPE, Lenovo, and Supermicro.

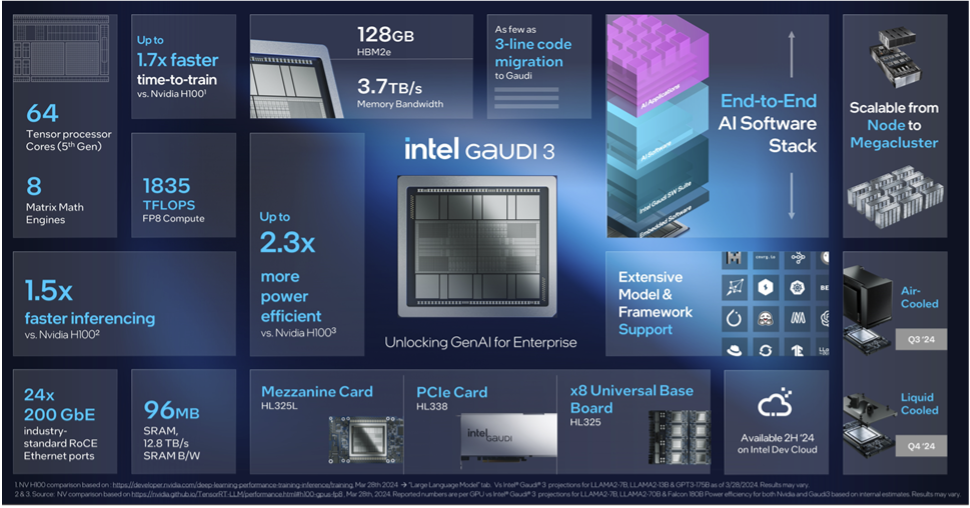

According to Intel, Gaudi 3 delivers on average 50% better inference performance and 40% better power efficiency than Nvidia's H100, at a lower cost. It's worth noting that Nvidia has already outlined its Blackwell GPUs and accelerators, which leapfrog H100 performance.
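Percentage claims like these are easier to compare when converted into the relative time and energy needed to finish a fixed amount of work. The sketch below does that arithmetic under two stated assumptions: "better inference" means higher throughput, and "power efficiency" means performance per watt. The helper name `relative_cost` is hypothetical, and the inputs are Intel's vendor figures, not independent measurements.

```python
# Hypothetical helper: converts an "X% better" claim into the relative cost of
# doing a fixed amount of work. Assumes "better inference" means higher
# throughput and "power efficiency" means performance per watt.

def relative_cost(percent_better: float) -> float:
    """Return work-normalized cost relative to the baseline (baseline = 1.0)."""
    return 1.0 / (1.0 + percent_better / 100.0)

# Intel's claimed averages versus Nvidia H100 (vendor figures, not measurements).
time_ratio = relative_cost(50)    # time to finish the same inference workload
energy_ratio = relative_cost(40)  # energy to finish the same inference workload

print(f"time: {time_ratio:.3f}x of H100, energy: {energy_ratio:.3f}x of H100")
```

If the claims hold, the same inference workload would take about two-thirds of the time and roughly 29% less energy than on an H100. Cost per unit of work would also depend on pricing, which Intel describes only as "lower."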

Intel Gaudi 3 is manufactured on a 5 nm process, and its compute engines operate in parallel to speed up deep learning computation and support scale-out.

Gaudi 3's compute engine combines 64 custom, programmable AI tensor processor cores (TPCs) with eight matrix multiplication engines (MMEs), and the chip adds more memory for generative AI processing. Twenty-four 200-gigabit Ethernet ports are integrated into each Gaudi 3 for networking, and the accelerator supports the PyTorch framework and optimized Hugging Face models.
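To show what the integrated networking adds up to, the short calculation below multiplies the port count by the per-port line rate. Intel's specifications list each of Gaudi 3's 24 Ethernet ports at 200 Gb/s, and that rate is taken here as an input. The result is raw line rate in one direction and does not reflect protocol overhead or achieved throughput.

```python
# Aggregate Ethernet line rate of one Gaudi 3 accelerator.
# Inputs: 24 integrated ports at 200 Gb/s each (Intel's published spec).
PORTS = 24
GBPS_PER_PORT = 200  # gigabits per second per port, one direction

aggregate_gbps = PORTS * GBPS_PER_PORT   # total gigabits per second
aggregate_tbps = aggregate_gbps / 1000   # convert to terabits per second
aggregate_gbytes = aggregate_gbps / 8    # convert bits to bytes: gigabytes per second

print(f"{aggregate_gbps} Gb/s = {aggregate_tbps} Tb/s = {aggregate_gbytes:.0f} GB/s")
```

This works out to 4.8 Tb/s, or 600 GB/s, of raw Ethernet bandwidth per accelerator in each direction.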

Gaudi 3 AI accelerators on IBM Cloud are currently available in the Frankfurt (eu-de) and Washington, DC (us-east) regions, and the Dallas (us-south) region is scheduled to follow in Q2 2025.
