Demonstrations of Nvidia’s digital human technologies got quite a bit of attention during Siggraph 2024. A demo with Looking Glass called Magic Mirror illustrated futuristic videoconferencing in the present day. Nvidia also introduced James, an interactive digital human based on a customer service workflow using Nvidia ACE. New ACE and Maxine capabilities were introduced and used in a number of demos.

We’ve seen some amazing futuristic videoconferencing technology in sci-fi shows and films of the 1960s and ’70s, from The Jetsons and Star Trek to Star Wars. You might recall that in 1989’s Back to the Future Part II, the future Marty McFly was fired from his job on a Zoom-like call. In real life, the use of videoconferencing, or video chat, has surged, fueled in no small part by the Covid-19 pandemic. This surge has prompted some companies to develop new and better ways to conduct these calls.

At Siggraph 2024, Nvidia and holographic display company Looking Glass gave onlookers something to call home about. In the Nvidia Innovation Zone, the two companies showed off their latest 3D videoconferencing research in a demo that combined each company’s newest offerings. The demo was called Magic Mirror. Its setup was simple, and so is the technology from the user’s point of view; under the hood, however, it is a completely different story.

Magic Mirror’s components included a basic webcam, Nvidia’s Maxine AI developer platform, Nvidia Inference Microservices (NIMs), Looking Glass’s spatial displays, and Nvidia RTX 6000 Ada Generation GPUs.

Maxine is a suite of high-performance NIMs and SDKs for deploying AI features that enhance audio, video, and AR effects for videoconferencing and telepresence. Part of Nvidia AI Enterprise, NIM microservices are easy to use and, says Nvidia, provide secure and reliable deployment of high-performance AI model inferencing across clouds, data centers, and workstations.

In the demo, Nvidia highlighted the latest advances in Maxine’s AI features, which developers can use to enhance audio and video quality and to enable AR effects. These include the advanced 3D AI capabilities of Maxine 3D, along with Maxine Video Relighting, the Maxine Eye Contact NIM, and Audio2Face-2D.

Coming soon in early access, Maxine 3D uses AI, neural reconstruction, and real-time rendering to convert 2D video portraits into 3D avatars in real time. According to Nvidia, it also harnesses neural radiance fields (NeRFs) to reconstruct detailed 3D perspectives from single 2D images. The result is highly realistic digital humans that can be integrated into videoconferencing and other two-way communication applications.

Audio2Face-2D, meanwhile, is now available in early access. It animates static portraits based on audio input, creating dynamic, speaking digital humans from a single image.

In the Magic Mirror demonstration, these advanced 3D AI capabilities were used to generate a real-time holographic feed of users’ faces on Looking Glass’s 16- and 32-inch group-viewable holographic displays, which recently began shipping.

“Nvidia Maxine brings us closer to realizing the dream I’ve had since the founding of Looking Glass: virtual teleportation between physical spaces,” said Shawn Frayne, co-founder and CEO of Looking Glass. “With Maxine, we now have the ability to transform any 2D video feed into immersive, high-fidelity 3D holographic experiences—without complex camera setups. The simplicity of what this technology enables pairs perfectly with the ethos of Looking Glass—making 3D more accessible for everyone, without having to gear up in headsets.”

This was not the first time Siggraph attendees got to experience this type of technology. Last year, in a back-to-the-future moment in Siggraph’s Emerging Technologies program, Nvidia Research demonstrated an AI-based 3D holographic booth that used its single-shot NeRF technology with a Looking Glass 32-inch holographic display. The 2023 demo reconstructed and autostereoscopically displayed a life-size talking head using consumer-grade compute resources and minimal capture equipment.

Magic Mirror was just one of the demonstrations Nvidia showcased at Siggraph 2024. In addition to Maxine, the company showed off upgrades to its ACE digital human technologies, which are designed to create a more immersive customer experience through interactions with hyperrealistic, interactive digital avatars.

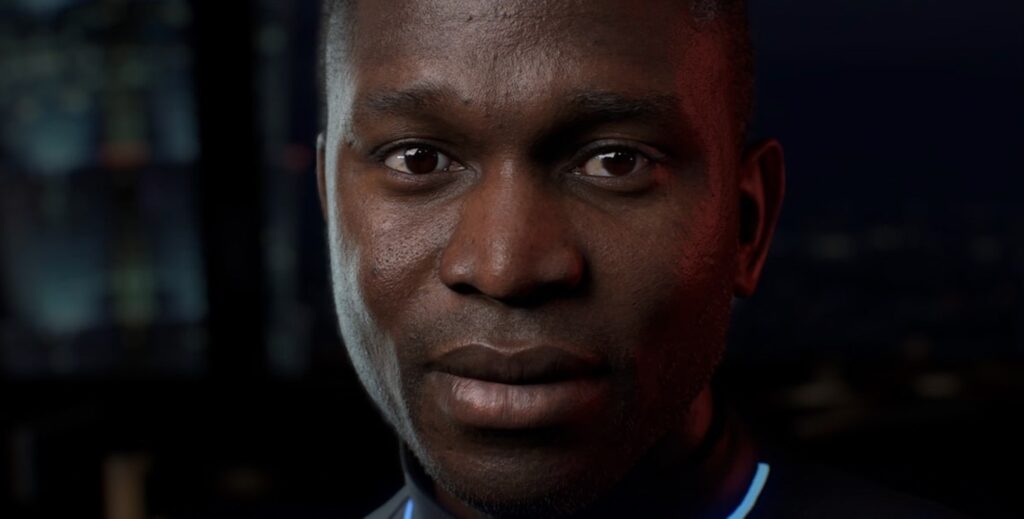

At the conference, Nvidia also previewed James, an interactive digital human based on a customer service workflow using Nvidia ACE. Built on top of NIM microservices and powered by Nvidia RTX rendering technologies, James not only looks lifelike but also provides context-accurate responses using retrieval-augmented generation (RAG). ElevenLabs gave James his voice.

At the show, Nvidia also teamed up with other companies that are using ACE and Maxine to power digital humans.