At Computex 2024, Nvidia showcased its latest advancements in AI technology, featuring AI PCs with NIM capability and new GeForce RTX laptops from various manufacturers. Updates on Grace Blackwell GB200 and NVL2 GPUs were highlighted, along with Rubin, a future release with HBM4 memory due in 2026. Nvidia emphasized AI innovations such as the RTX AI Toolkit and Nvidia ACE, aiming to enhance generative AI applications. With over 200 tech partners integrating NIM into their platforms and accessibility through the Nvidia AI Enterprise software platform, Nvidia’s impact on industries like customer service chatbots, supply chain copilots, and digital human avatars is anticipated (albeit not highly; who loves a chatbot?).

What do we think? So, okay, this Computex looks like a just-enough effort from Nvidia. We learned the name of the next GPU, Rubin. MGX adoption has hit 25 partners and 90 systems in 2024. You can buy the Nvidia GB200 NVL2 in an MGX configuration. You can remix games with RTX Remix. Software partnerships are progressing with VLC and DaVinci Resolve. SFF-ready cards for gaming enthusiasts have lots of OEM support.

But, it’s basically a victory lap. Even AMD and Intel are going to support the MGX architecture. They don’t need more than this today.

Nvidia is confident but news is thin

Despite putting out nine press releases, delivering the opening keynote, and being everywhere at Computex, Nvidia’s announcements for the show seemed very much “just another day” at Nvidia, with an emphasis on more information about already announced initiatives, like NIM, and products. Notably for us, Blackwell’s successor got a name and a date.

There are new GeForce RTX laptops from Acer, Asus, MSI, etc. (we particularly admire the Copilot+-capable Asus TUF A14, Zephyrus G16, and ProArt PX13/P16). That’s a big part of how Nvidia is rising to the challenge of “delivering AI models to 1 billion Windows users.” Unlike Intel, which is clearly annoyed at not being more prominent in Microsoft’s Copilot+ launch, Nvidia is just behaving like it invented the thing. Heck, Nvidia gives the strong impression that it created AI, but the message that these machines can run CUDA will be put to the test by AMD, Intel, and Qualcomm, which all have serious NPUs in their AI PC offerings.

In Blackwell news, the Grace Blackwell GB200, announced back in March, is now in production. It is, as we have already reported, a beast. If you don’t need 72 GPUs, there is now the Nvidia GB200 NVL2, a block of just two Blackwell GPUs.

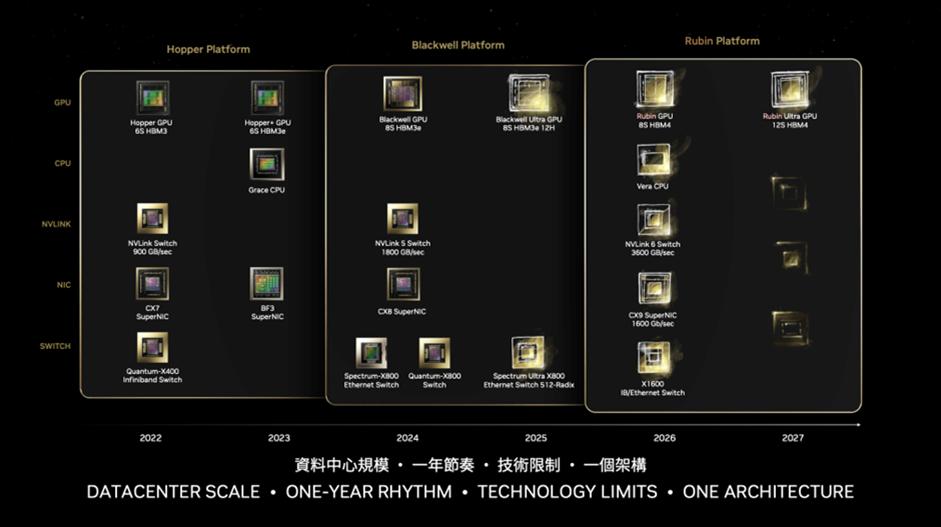

We do now know that Blackwell will be followed in 2026 by Rubin, with HBM4 memory, and that both families will get Ultra versions as midlife kickers. Rubin is expected to debut in Q4 2025 (R series) and H1 2026 (DGX/HGX), with the Ultra not appearing until 2027.

Meanwhile, Nvidia plans an enhanced Grace-based module that pairs two R100 GPUs with a Grace CPU built on TSMC’s 3nm process rather than the current 5nm.

There was a lot about switches in Nvidia President Jensen Huang’s Computex keynote too, with super fast Ethernet.

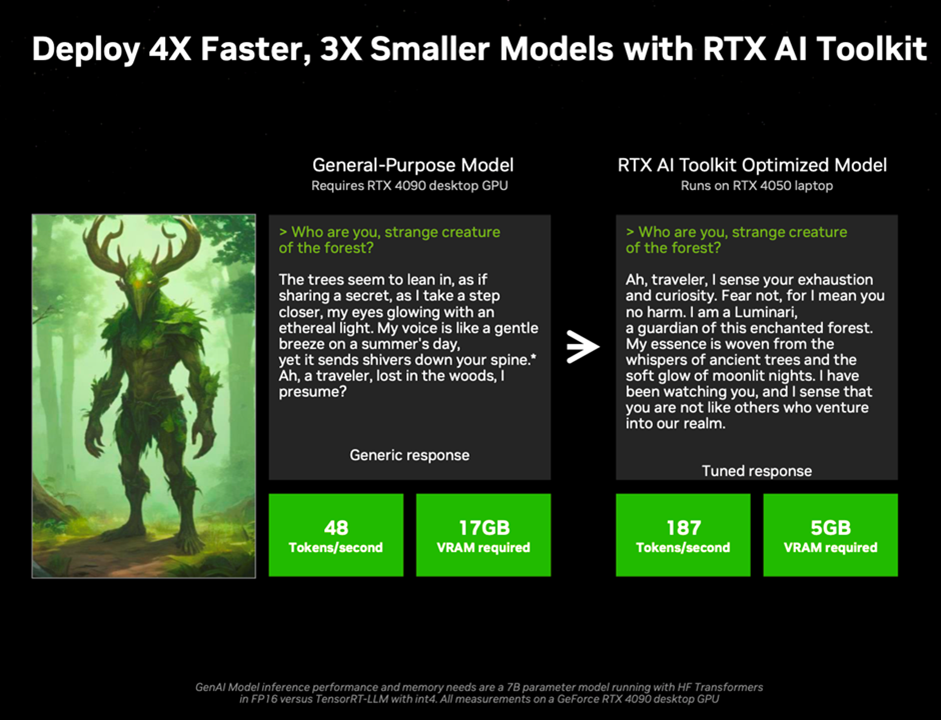

“Developers need fine-tuning techniques for LLMs, a way to optimize the model, then a way to get it to people,” Nvidia says. So they are making “people” you can use to access the AI. First, Nvidia is launching RTX AI Toolkit, a set of models, tools, and an inference manager to deploy.

Nvidia says you can deploy 4× faster and 3× smaller models with the RTX AI Toolkit—models that are capable of running on a laptop, not a desktop.

Next up is Nvidia ACE, the suite of Nvidia Inference Microservices (NIMs) that bring GenAI NPCs and other GenAI applications to life. This has been seen before in Nvidia’s ramen shop demo from last year, but now there are digital human NIMs running on AI PCs with the same level of precision and accuracy as in the cloud (and presumably better latency).

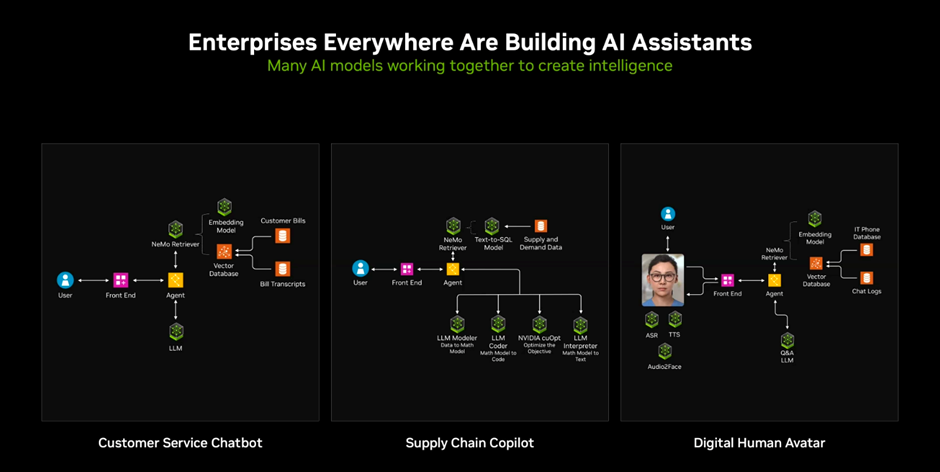

These optimized containers can run on clouds, data centers, or workstations, enabling the quick creation of copilots, chatbots, and more.

By utilizing multiple models for text, images, video, and speech generation, NIM enhances developer productivity and infrastructure efficiency. Over 200 technology partners are integrating NIM into their platforms to accelerate generative AI deployments for various applications.

NIM can be accessed through the Nvidia AI Enterprise software platform, with free access for Nvidia Developer Program members for research and testing purposes starting next month.

How will this change industry? Think customer service chatbots, supply chain copilots, and digital human avatars. Imagine everything from gremlins to therapists living in your AI PC.

Nvidia has lots of demos from Aww, Inworld, OurPalm, Perfect World Games, and others.

Nvidia NIMs are very focused on gaming. Depth is a hallmark of gaming, says Nvidia, but it can be off-putting to the gamer (catch-22). Nvidia Project G-Assist will enable AI assistants for gaming to help surface that depth, for example, with context-aware game guidance or performance optimization and system tuning.

Nvidia says an LLM connected to a retrieval-augmented generation (RAG) pipeline, basically a custom data source bolted onto the LLM, will enable gamers to get the most out of their game time.
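To make the RAG idea concrete, here is a minimal Python sketch of the retrieve-then-prompt pattern. It is not Nvidia's implementation and uses no Nvidia API. The guide snippets in `DOCS`, the function names, and the simple word-overlap scoring are all illustrative assumptions, and the final call to an actual LLM is left out.

```python
import math
import re
from collections import Counter

# Hypothetical game-guide snippets standing in for a real knowledge base.
DOCS = [
    "To defeat the frost giant, equip fire arrows and aim for the head.",
    "Lower shadow quality and enable DLSS to raise the frame rate.",
    "Crafting a healing potion requires two herbs and one vial of water.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, docs, k=1):
    """Return the k snippets most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        docs,
        key=lambda doc: cosine(query_vec, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, docs, k=1):
    """Prepend retrieved context to the question; an LLM would receive this."""
    context = "\n".join(retrieve(query, docs, k))
    return f"Answer using the context.\nContext:\n{context}\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("How do I raise my frame rate?", DOCS))
```

A production system would likely swap the word-overlap scoring for learned embeddings and a vector index, but the shape stays the same: look up relevant material first, then hand it to the model alongside the question.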

And just as NPCs are becoming unique AI beings, data centers are apparently becoming AI factories, unlocking $100 trillion industries—everything from animation to DNA modeling.

Nvidia is keen to remind you of its heritage. Blackwell is 1,000× more performant than Pascal was eight years ago, and the energy required per token has dropped 45,000× over the same period, Nvidia says.
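For a sense of scale, those eight-year multiples can be converted into compound yearly rates. The short Python sketch below does only that arithmetic, assuming the gain compounds evenly each year (our simplification, not an Nvidia claim); the function name is ours.

```python
def annual_factor(total_gain, years):
    """Yearly multiplier that compounds to total_gain over the given years."""
    return total_gain ** (1.0 / years)


if __name__ == "__main__":
    perf = annual_factor(1_000, 8)      # Nvidia's stated performance gain
    energy = annual_factor(45_000, 8)   # Nvidia's stated energy-per-token drop
    print(f"Performance: about {perf:.1f}x per year")
    print(f"Energy per token: about {energy:.1f}x lower per year")
```

On that assumption, the claims work out to roughly 2.4× more performance and roughly 3.8× less energy per token every year, well ahead of a doubling every two years.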

It’s the confident way of talking about the future that you might expect from the world’s third-biggest technology company, and if it feels a little hyperbolic, we should probably all give them a pass. Nvidia has seldom put a foot wrong. Okay, supply chain management might sometimes be a bit iffy, and the Arm thing was chaos, but overall, Nvidia took the right bets, made the right products, and sold them with unparalleled drive. No change is expected.