Nvidia has introduced the GB200 NVL4 Superchip, expanding its AI hardware offerings alongside the H200 NVL PCIe module for standard server configurations. The GB200 NVL4 Superchip features four B200 GPUs and two Grace CPUs, doubling processing resources and supporting high-performance computing and AI workloads. It boasts 1.3TB of coherent memory and Nvidia’s fifth-generation NVLink interconnect for high-speed communication. The H200 NVL PCIe module, available in December, offers an air-cooled design, PCIe 5.0 connectivity, and an NVLink interconnect bridge for connecting multiple GPUs. It provides 900 GB/sec bandwidth per GPU and suits enterprise data centers with specific cooling and power constraints. Though slightly less performant than its SXM counterpart, the H200 NVL delivers improved AI and HPC workload performance.

At the Supercomputing 2024 conference in Atlanta, Nvidia introduced its Grace Blackwell GB200 NVL4 Superchip, a significant expansion of its AI hardware offerings.

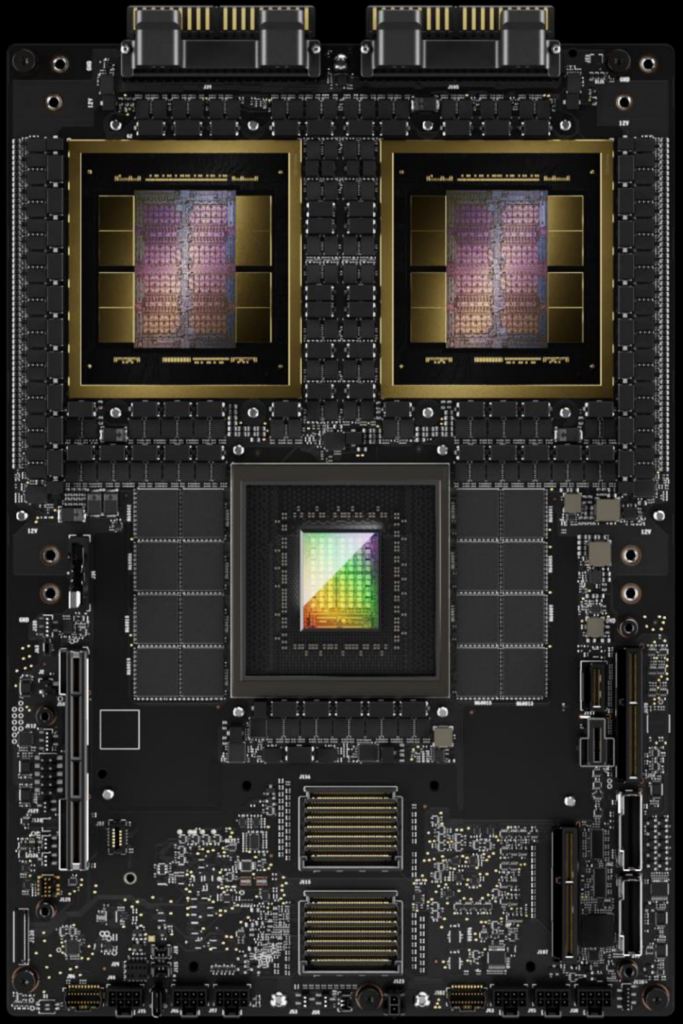

This new product line is built on the Grace Blackwell GB200 Superchip, which the company introduced earlier this year and is destined to be the company’s flagship for AI computing. Alongside this announcement, Nvidia revealed the general availability of its H200 NVL PCIe module, aimed at making advanced AI hardware more accessible to standard server configurations.

Nvidia’s GB200 NVL4 Superchip is engineered for high-performance computing and AI workloads, focusing on single-server solutions. According to Dion Harris, Nvidia’s director of accelerated computing, the chip supports applications like those seen in Hewlett Packard Enterprise’s Cray Supercomputing EX154n Accelerator Blade. This server design accommodates up to 224 B200 GPUs and is projected for release by late 2025.

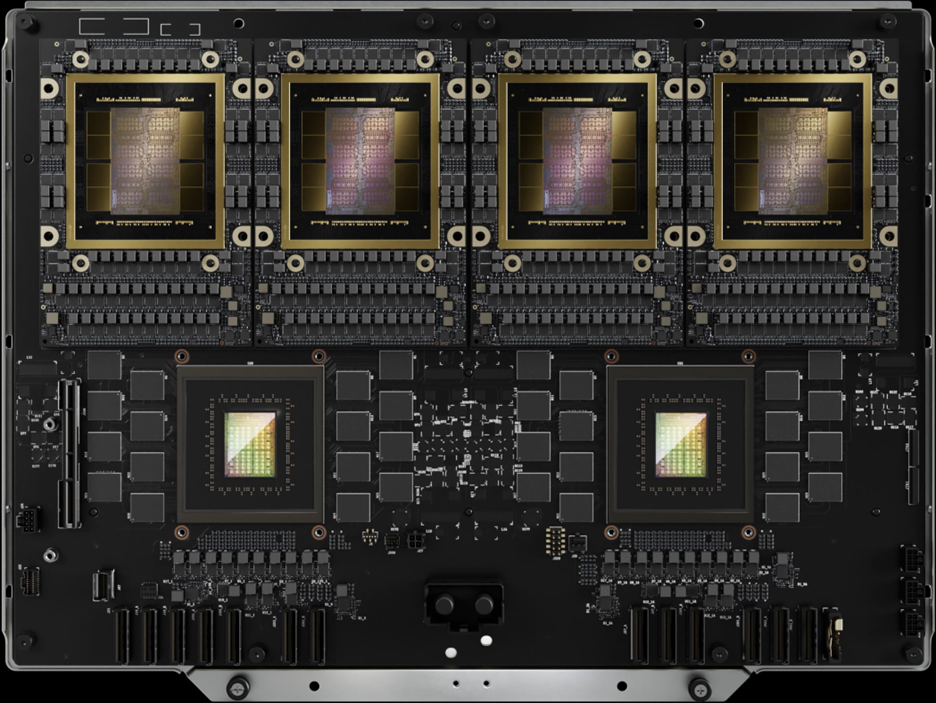

A closer look at the GB200 NVL4 Superchip reveals a substantial leap in design compared to its predecessor, the GB200 Superchip. While the original GB200 featured a compact layout connecting one Grace CPU with two Blackwell B200 GPUs, the NVL4 iteration incorporates two Grace CPUs and four B200 GPUs, doubling the processing resources. This expanded configuration uses Nvidia’s fifth-generation NVLink interconnect, enabling high-speed communication between components at a bidirectional throughput of 1.8 TB/sec per GPU. The system also features 1.3TB of coherent memory shared across the four GPUs through NVLink.

The performance of the GB200 NVL4 Superchip was showcased through comparisons with Nvidia’s GH200 NVL4 Superchip, which uses Grace Hopper architecture. The new NVL4 model demonstrated significant improvements, including (says Nvidia) 2.2´ faster performance on a MILC code simulation workload and 80 percent higher speeds for tasks like training the GraphCast weather forecasting AI model and performing inference with the Llama 2 AI model. Nvidia, however, refrained from releasing additional technical specifications or further performance details.

At SC2024, Nvidia emphasized that its rollout of the Blackwell architecture is progressing smoothly, with partner organizations introducing new products based on this platform. Harris highlighted the reference architecture’s role in helping partners efficiently bring customized solutions to the market.

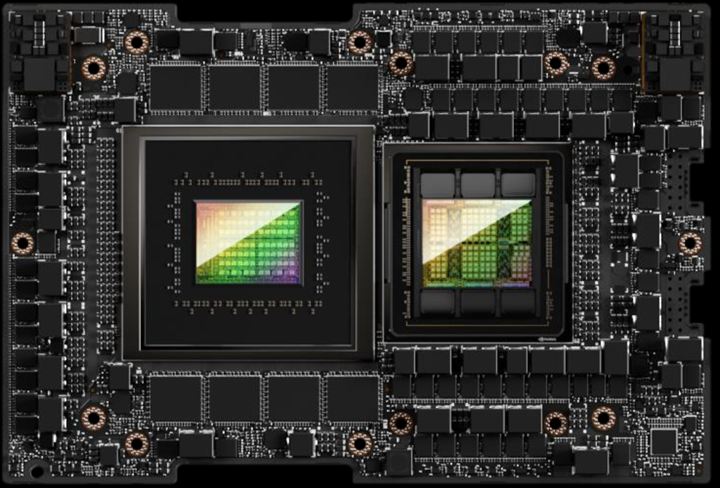

In addition to unveiling the GB200 NVL4 Superchip, Nvidia announced the upcoming availability of the H200 NVL PCIe module, designed to broaden the accessibility of its AI hardware. This module will be launched in December and feature Nvidia’s H200 GPU, which succeeded the H100 earlier this year. The H200 builds on the Hopper architecture, which is central to Nvidia’s dominance in the generative AI chip market.

The H200 NVL PCIe design allows connecting multiple GPUs using Nvidia’s NVLink interconnect bridge, providing a throughput of 900 GB/sec per GPU. This approach evolves from the H100 NVL, which could only connect two GPUs. The H200 NVL is also air-cooled, making it suitable for enterprise data centers with specific cooling and power constraints.

Despite these innovations, the H200 NVL has slightly reduced performance compared to its SXM counterpart. It delivers 30 TFLOPS for FP64 operations and 3,341 TFLOPS for int8 tasks, slightly below the SXM version’s capabilities. This difference stems from the PCIe module’s lower thermal design power, capped at 600W, versus the SXM’s 700W.

Nvidia presented the H200 NVL as an energy-efficient solution for organizations looking to optimize AI and HPC workloads. Its design is tailored for flexible configurations, allowing users to scale from a single GPU to multiple GPUs within a server. Harris pointed out that enterprises can accelerate AI applications, while reducing energy costs, by utilizing this new module.

The H200 NVL also incorporates substantial memory upgrades compared to its predecessor. It features 141GB of high-bandwidth memory and achieves 4.8 TB/sec of memory bandwidth, a notable increase over the H100 NVL’s 94GB and 3.9 TB/sec. This configuration enables improved performance for tasks such as inference on the Llama 3 model and HPC workloads like reverse time migration modeling, where the H200 NVL delivers 70% and 30% faster performance, respectively.

Nvidia’s GPU NVL72 Blackwell is not a halo product, it’s a real product. Its attractiveness is in its ability to deploy quickly. Most will be liquid-cooled, not in conventional data centers but in special places. Demand is being driven to get to a higher-level model, and not just run existing workloads faster. It will have shorter lead times than Hopper POD—the supply chain has been working on getting it out sooner. If there is a heating issue, that won’t hold off shipments. However, it is pushing absolute limits of what can be driven into a data center now, what can go into a rack, a box. This is putting supercomputing in the hands of the masses. Still, it’s a new product; there will be regular new product issues (pushing the limits) but not recall issues. It’s been out for three weeks—give it some time. The US will remain in the lead in adoption because it is more flexible in finding the sources to power the data centers. Nonetheless, the growth rate will have to slow down because the thing being grown is so huge—but it’s not going to collapse.