SPEC, a nonprofit that establishes and maintains benchmarks and tools for computing systems, has released a new benchmark that measures workstation performance. SPECworkstation version 4.0 is a big step up from the previous version 3.1, released in March 2021. It provides a more accurate, detailed, and relevant measure of the performance of today's workstations, especially for AI/ML workloads.

AI and ML are on the rise across practically every industry, and these technologies are evolving rapidly. As a nonprofit organization that establishes, maintains, and endorses standardized benchmarks and tools to evaluate performance for the newest generation of computing systems, the Standard Performance Evaluation Corporation (SPEC) must ensure that its benchmarks keep pace with the constantly evolving technologies that are driving change. To this end, the organization has released the SPECworkstation 4.0 benchmark, which it describes as a significant update to version 3.1, released in March 2021.

Compared with the SPECworkstation 3.1 benchmark, the SPECworkstation 4.0 benchmark provides a more accurate, detailed, and relevant measure of the performance of today's workstations, especially for AI/ML workloads. Such insights can lead to the development of more efficient models and, ultimately, the advancement of AI capabilities, according to SPECwpc Chair Chandra Sakthivel.

The SPECworkstation benchmark is a comprehensive tool that measures the major aspects of workstation performance. It provides a real-world measure of CPU, graphics, accelerator, and disk performance, giving users the data they need to make informed decisions about their hardware investments.

Version 4.0 reflects the latest developments in workstation hardware as well as in today's professional software, as users increasingly add data analytics, artificial intelligence, and machine learning to their workloads. These changes span a wide range of industries. As such, the benchmark covers the diverse needs of professionals across seven industry verticals: AI and machine learning, energy, financial services, life sciences, media and entertainment, product design, and productivity and development.

“Workstations remain the foundation for the daily computing tasks of engineers, scientists, and developers around the world. Businesses must have a way to determine the best systems for their needs—and computer vendors need a reliable way to gauge the performance of their systems against their competitors,” said Sakthivel. “The new SPECworkstation 4.0 benchmark includes real-world applications and workloads to enable fair and accurate comparisons of computing performance, including for the rising number of AI/ML and data analytics use cases.”

Key new features of the benchmark include:

- Support for AI/ML: The benchmark includes a set of tests focused on AI and ML workloads, including data science tasks and ONNX Runtime-based inference tests.

- New workloads, including:

    - Autodesk Inventor: Measures key performance metrics in Inventor, widely used in the architecture, engineering, and construction (AEC) segment.

    - LLVM-Clang: Measures code compilation performance using the LLVM/Clang compiler toolchain.

    - Data science: Represents a data scientist's workflow, including data scrubbing, ETL (extract, transform, load), and classical ML operations using tools such as NumPy, pandas, scikit-learn, and XGBoost.

    - Hidden line removal: Measures the time taken to remove occluded edges from wireframe models.

    - MFEM: Uses finite element methods to perform dynamic adaptive mesh refinement (AMR).

    - ONNX inference: Benchmarks AI/ML inference latency and throughput using the ONNX Runtime inference framework.

- Updated CPU workloads: The Blender, HandBrake, NAMD, and Octave workloads have been updated to improve the relevance, accuracy, and compatibility of performance measurements.

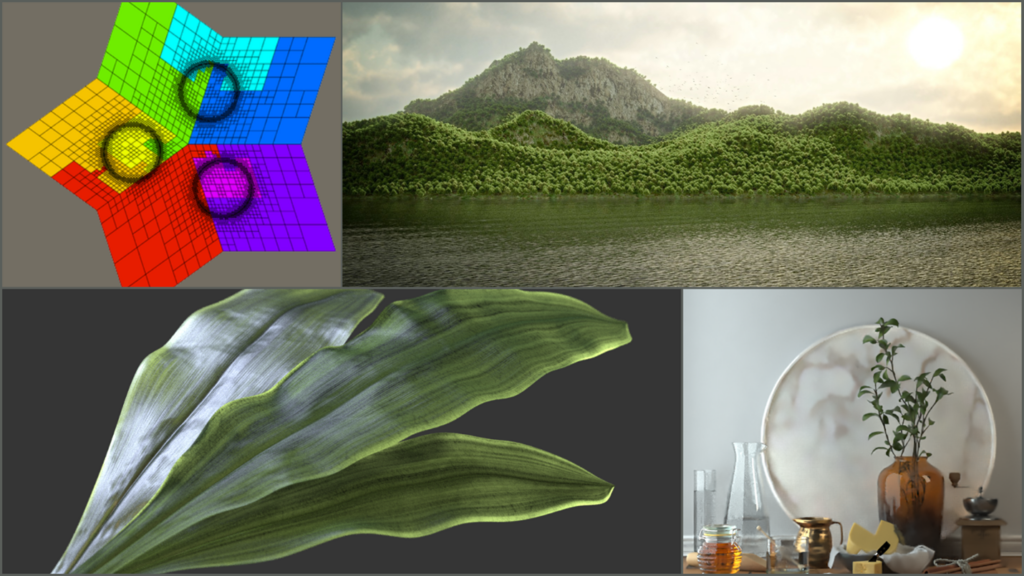

- Updated graphics workloads: Tests adapted from the SPECviewperf 2020 v3.1 benchmark measure the performance of professional graphics cards using application-based traces.

- New accelerator subsystem: Measures and characterizes accelerator performance in the workstation environment.

- Enhanced user interfaces: A completely redesigned UI simplifies the benchmarking process and makes it more user-friendly, and a new command line interface (CLI) enables easier automation.

The SPECworkstation 4.0 benchmark evaluates inference latency and throughput across batch sizes and numeric precisions, including FP32, FP16, and INT8. This reflects the industry's ongoing shift toward more efficient, quantized AI models. According to SPEC, by offering a comprehensive set of performance metrics, the benchmark helps scientists and developers understand the capabilities of their workstation hardware and make informed decisions about optimizing their systems for real-world AI/ML applications.
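To make the relationship between the two metrics concrete, here is a minimal, hypothetical Python sketch of a latency/throughput harness. It is not SPEC's measurement code: it times a stand-in "model" (a single dense layer in NumPy, not an ONNX model), takes the median latency over several runs at each batch size, and computes throughput as batch size divided by latency. The names `benchmark_inference` and `make_dense_model` are illustrative assumptions. Because NumPy lacks optimized FP16 and INT8 kernels on most CPUs, the precision parameter here only shows where precision would plug in; it will not reproduce the speedups a real inference runtime such as ONNX Runtime can deliver.

```python
import statistics
import time
from typing import Callable, Dict, List

import numpy as np
from numpy.typing import DTypeLike


def benchmark_inference(
    run_batch: Callable[[np.ndarray], np.ndarray],
    make_batch: Callable[[int], np.ndarray],
    batch_sizes: List[int],
    warmup: int = 3,
    iterations: int = 20,
) -> Dict[int, Dict[str, float]]:
    """Time run_batch at each batch size; report median latency and throughput."""
    results: Dict[int, Dict[str, float]] = {}
    for batch_size in batch_sizes:
        batch = make_batch(batch_size)
        # Warm-up runs are excluded from timing (caches, lazy initialization).
        for _ in range(warmup):
            run_batch(batch)
        latencies = []
        for _ in range(iterations):
            start = time.perf_counter()
            run_batch(batch)
            latencies.append(time.perf_counter() - start)
        # Median is less sensitive to occasional OS scheduling hiccups than the mean.
        latency_s = statistics.median(latencies)
        results[batch_size] = {
            "latency_ms": latency_s * 1000.0,
            # Throughput: samples processed per second at this batch size.
            "throughput_per_s": batch_size / latency_s,
        }
    return results


def make_dense_model(dtype: DTypeLike, in_features: int = 512, out_features: int = 512):
    """Hypothetical stand-in model: one dense layer (matrix multiply) in the given dtype."""
    rng = np.random.default_rng(0)
    weights = rng.standard_normal((in_features, out_features)).astype(dtype)

    def run_batch(x: np.ndarray) -> np.ndarray:
        return x @ weights

    def make_batch(batch_size: int) -> np.ndarray:
        return rng.standard_normal((batch_size, in_features)).astype(dtype)

    return run_batch, make_batch
```

For example, `benchmark_inference(*make_dense_model(np.float32), batch_sizes=[1, 8, 32])` returns one latency/throughput pair per batch size. Larger batches usually raise throughput while increasing per-batch latency, which is why reporting both metrics across several batch sizes gives a fuller picture than either number alone.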

According to SPEC, a reliable benchmark is more critical than ever as enterprises focus on building infrastructure to support AI-powered use cases and more data scientists and software developers rely on workstations for data science, data analytics, and ML. SPEC says the SPECworkstation 4.0 benchmark accurately measures a workstation's performance across the entire data science pipeline, from data acquisition and cleaning to ETL processes and algorithm development.

The SPECworkstation 4.0 benchmark is available now for download. It is free for the user community and costs $5,000 for vendors of computer-related products and services.