Twenty-five years ago, Nvidia introduced the NV10, used in the GeForce 256, and popularized the term "graphics processing unit" (GPU). The term once referred only to a chip, but it now covers graphics add-in boards, AI accelerators, and entire data center systems. Nvidia's Tesla V100 and DGX-1 expanded its scope. Today, even racks of chassis are called GPUs, which blurs the lines between chip, board, pod, and system. Competitors building AI-focused ASICs face a nomenclature problem because "GPU" dominates the conversation.

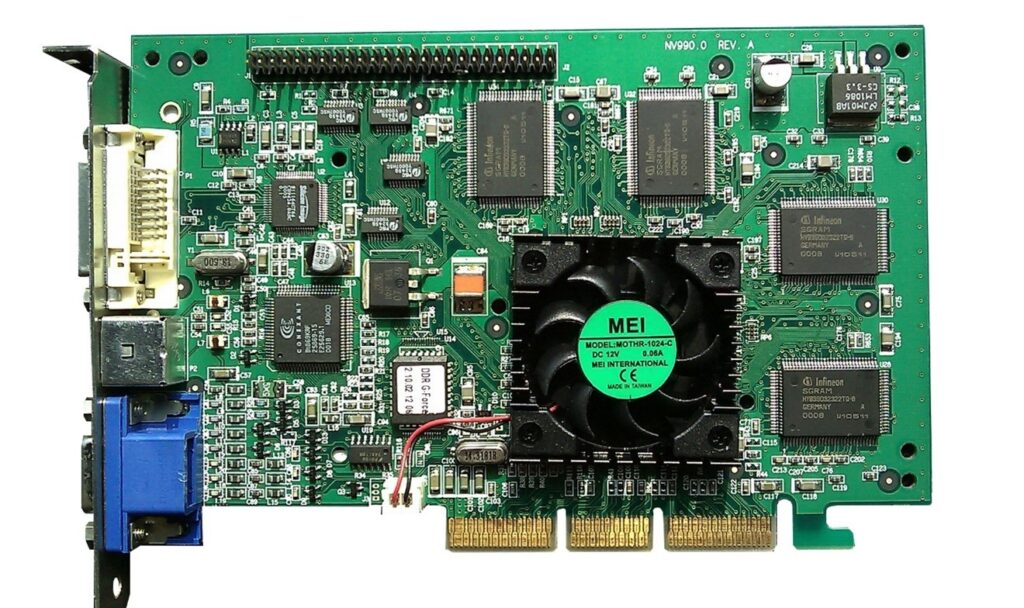

When Nvidia introduced the NV10, used on the GeForce 256 AIB, 25 years ago this month, the company labored to come up with a name for it: the graphics processing unit. The name quickly caught on, and the acronym is now part of our vocabulary. As more people have adopted the term, the share of them who clearly understand what a GPU is and isn't has shrunk, and the term has taken on new meanings.

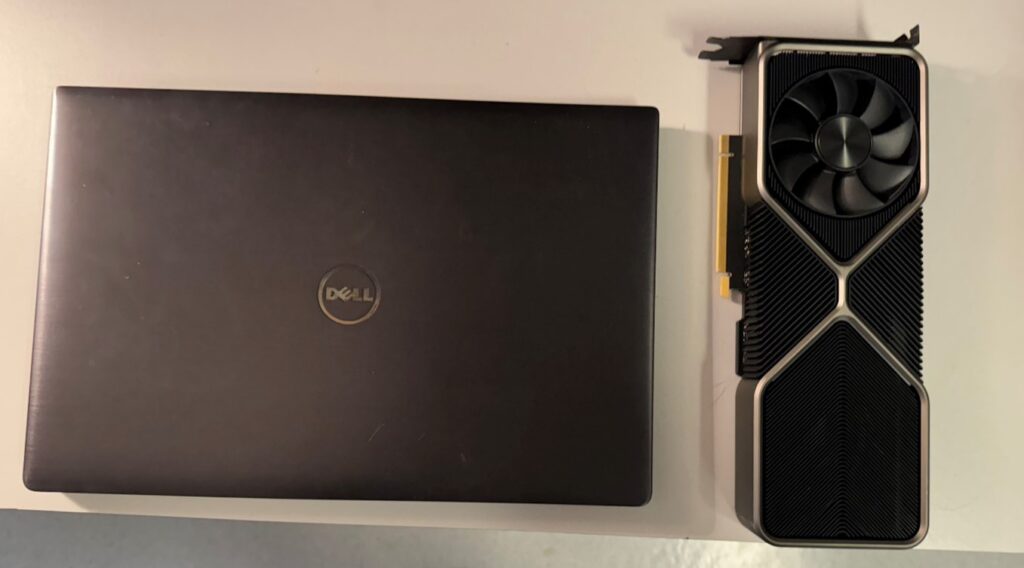

In its second decade, the acronym expanded to mean a graphics add-in board (AIB), commonly called a card. And even though a GPU is a chip, and a chip is all that fits into a notebook, people still talk about the GPU card in a notebook. Ironically, a high-end AIB costs as much as or more than a notebook and draws more power.

When the GPU was adopted for ML and AI training, the first deployments used workstations and high-end gaming AIBs. Nvidia's first GPU designed specifically for AI acceleration was the Tesla P4, announced in 2016, and the Tesla V100, announced in 2017, was its first to add Tensor Cores dedicated to AI math. Even so, these boards were called GPUs, not AIBs or cards.

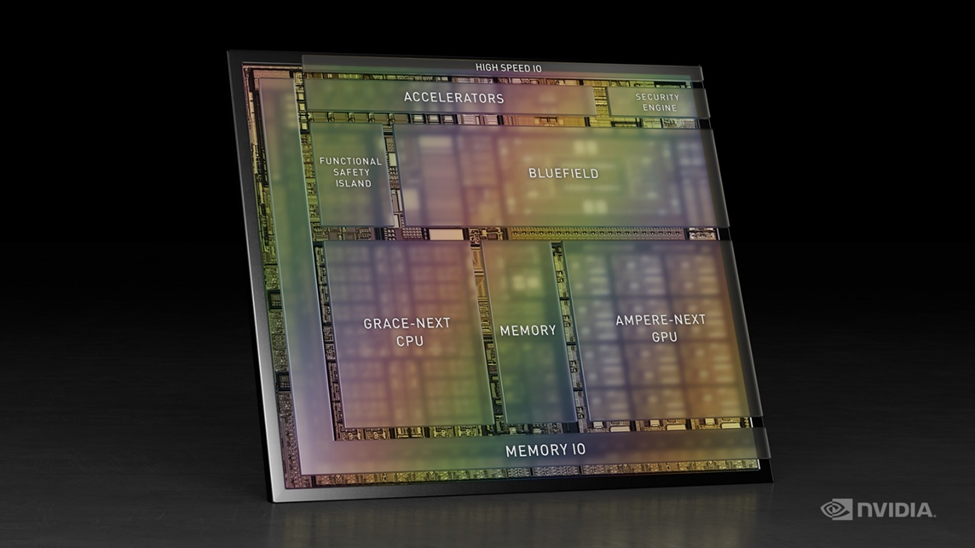

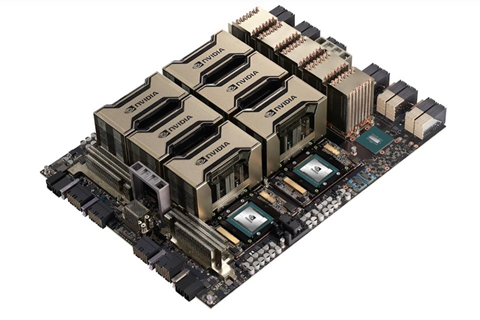

Nvidia introduced its first AI-focused data center system, the DGX-1, in 2016, and announced its first AI-focused pod-like solution, the Nvidia DGX Pod, in 2018. Both quickly became known as GPUs. People didn't call it an Nvidia DGX Pod; they called it an Nvidia GPU Pod.

And even an entire rack of DGX chassis gets called an AI GPU.

So, whereas we once thought we knew what a GPU was, today we have to ask the speaker, uh, ’scuse me, but what kinda GPU are you tawkin’ ’bout, a chip, board, pod, chassis, or rack?

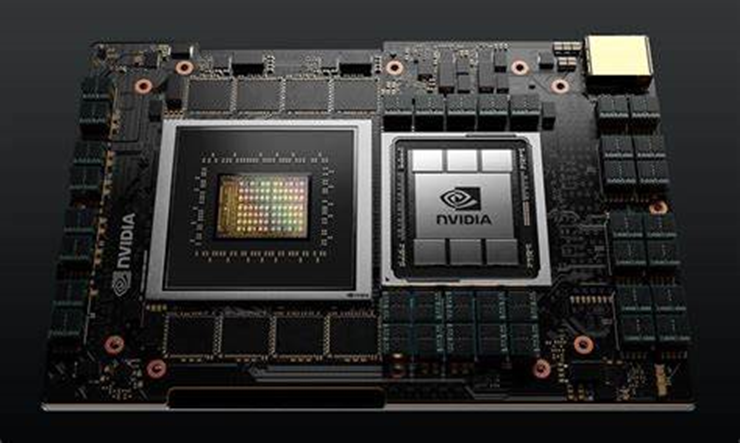

Before AI took over our lives, vocabulary, and entire conversations, we referred to AIBs installed in a server's rack chassis as compute accelerators. Then that changed to AI accelerators, and today they're just GPUs. That nomenclature slide is a problem for competitors building ASICs for AI, such as Cerebras, Groq, and Intel with its Gaudi.

We’ve come a long way in the evolution of the GPU in the last 25 years.