Flow Computing, a Helsinki-based start-up, was founded in 2024 as a spinout from VTT Technical Research Center of Finland. The company develops technology enhancing CPU performance for demanding applications like locally hosted AI and parallel computing. Its patented Parallel Processing Unit (PPU) architecture and compiler ecosystem provide notable acceleration. The design is customizable, scalable, and backward compatible with existing software. Flow Computing will license its IP, focusing on optimizing workflow assignments for application developers.

Flow Computing, a start-up based in Helsinki, Finland, was founded in 2024 as a spinout from the VTT Technical Research Center of Finland. The company is developing technology to enhance CPU performance for demanding applications like locally hosted AI and parallel computing.

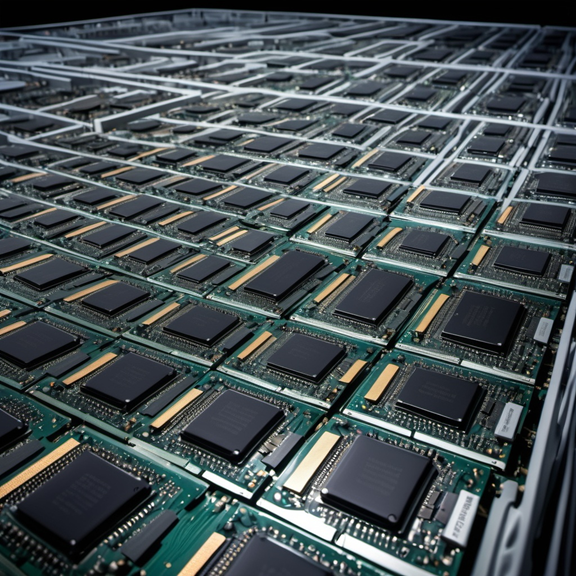

The company claims its design can be integrated with various architectures and process geometries. Once integrated, the company says, it provides significant acceleration for standard products, enables increased throughput, and reduces reliance on GPU acceleration for CPU instructions.

Flow Computing’s patented Parallel Processing Unit (PPU) architecture and compiler ecosystem make the performance improvement possible, the company says, offering a notable boost in processing capabilities.

IP for PPU for you

The PPU is an IP block that is tightly integrated with a CPU on the same silicon. It is designed to be highly configurable to meet the specific requirements of numerous use cases.

Flow says only minimal CPU modifications are needed: integrating the PPU interface into the instruction set and updating the CPU cores to take advantage of the new performance levels.

The company says its parametric design allows extensive customization, including:

- Number of cores in PPU (four, 16, 64, 256, etc.)

- Number and type of functional units (such as ALUs, FPUs, MUs, GUs, NUs)

- Size of on-chip memory resources (caches, buffers, scratchpads)

- Instruction set modifications to complement the CPU’s instruction set extension
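As a rough illustration of what such a parametric design means for an integrator, the options above can be pictured as a small configuration record. This is a minimal sketch only: `PPUConfig`, its field names, and the default values are hypothetical and are not taken from Flow's tooling.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PPUConfig:
    """Hypothetical parameter set for one PPU instance (names are illustrative)."""

    cores: int = 64
    # Count per functional-unit type; the keys here are placeholders.
    functional_units: dict = field(default_factory=lambda: {"ALU": 1, "FPU": 1})
    cache_kib: int = 0        # on-chip cache size, placeholder value
    scratchpad_kib: int = 0   # on-chip scratchpad size, placeholder value

    def __post_init__(self):
        # Basic sanity checks; a real configurator would enforce far more.
        if self.cores < 1:
            raise ValueError("a PPU needs at least one core")
        if any(count < 0 for count in self.functional_units.values()):
            raise ValueError("functional-unit counts cannot be negative")
        if self.cache_kib < 0 or self.scratchpad_kib < 0:
            raise ValueError("memory sizes cannot be negative")


# Two example instances using core counts mentioned in the article.
smartwatch = PPUConfig(cores=16)
server = PPUConfig(cores=256, functional_units={"ALU": 4, "FPU": 2})
```

The functional-unit counts and memory sizes shown are invented for the example; only the core counts come from the article.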

Flow says the performance enhancement scales with the number of PPU cores. A 16-core PPU is ideal for small devices like smartwatches; a 64-core PPU fits well in smartphones and PCs; and a 256-core PPU is best suited for high-demand environments like AI, cloud, and edge computing servers.

Flow attributes its claimed performance gains to three key features of the design.

Latency hiding. Memory access, especially shared access, represents a big challenge for current multicore CPUs. Memory references slow down execution, and the intercore communication network causes additional latency. Traditional cache hierarchies can cause coherency and scalability problems.

Flow says its PPU design hides the latency of memory references by executing other threads while a memory access is in flight. Coherency problems do not arise, the company says, because no caches are placed in front of the network. Scalability is provided via a high-bandwidth network-on-chip.
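The general idea of hiding memory latency with multithreading can be shown with a toy cycle-count model. The sketch below is not a model of Flow's hardware: it assumes a single core that can issue one memory reference per cycle, a fixed `mem_latency`, and a fixed-priority thread picker, all of which are simplifications chosen for illustration.

```python
def utilization(num_threads, mem_latency, refs_per_thread):
    """Fraction of cycles on which the core issues a memory reference.

    Toy model: after a thread issues a reference, it cannot issue again
    until mem_latency cycles later. Other ready threads fill the gap.
    """
    ready_at = [0] * num_threads                  # cycle when each thread may issue
    remaining = [refs_per_thread] * num_threads   # references left per thread
    cycle = 0
    issued = 0
    while any(remaining):
        # Pick the first thread that still has work and is not waiting on memory.
        for t in range(num_threads):
            if remaining[t] and ready_at[t] <= cycle:
                remaining[t] -= 1
                ready_at[t] = cycle + mem_latency
                issued += 1
                break
        cycle += 1                                # an idle cycle if nothing issued
    return issued / cycle


single = utilization(num_threads=1, mem_latency=10, refs_per_thread=100)
many = utilization(num_threads=10, mem_latency=10, refs_per_thread=100)
```

In this model a single thread keeps the core busy on roughly one cycle in ten, while ten threads with a 10-cycle latency keep it busy on every cycle, which is the effect the latency-hiding claim relies on.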

Synchronization. The use of parallelism by current multicore CPUs can cause additional challenges due to the inherent asynchronicity of the CPU’s processor cores. Thread synchronization is required whenever there are interthread dependencies. Those synchronizations are very time-consuming (taking 100 to 1,000 clock cycles).

Flow claims that PPU synchronization is needed only once per step, because the threads are independent of each other within a step (dropping the time down to one clock cycle). Synchronization is also overlapped with execution (dropping the time down to 1/100), the company says.
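A classic example of step-synchronous execution is a parallel prefix sum, in which every update within a step reads only the previous step's data. The sketch below simulates that pattern sequentially in Python. It is an illustration of the "independent within a step, synchronize once per step" idea in general, not Flow's programming model, and the function name `inclusive_scan` is an assumption made for the example.

```python
def inclusive_scan(values):
    """Step-synchronous inclusive prefix sum (Hillis-Steele style).

    Returns the scanned list and the number of steps, which equals the
    number of synchronization points in this model.
    """
    data = list(values)
    n = len(data)
    steps = 0
    stride = 1
    while stride < n:
        prev = data
        # Every element is computed from prev only, so the n updates in
        # this step are mutually independent and could run as n threads.
        data = [prev[i] + prev[i - stride] if i >= stride else prev[i]
                for i in range(n)]
        # One synchronization per step: the next step starts only after
        # all updates of this step are complete.
        steps += 1
        stride *= 2
    return data, steps


result, sync_points = inclusive_scan([1, 2, 3, 4, 5, 6, 7, 8])
# result == [1, 3, 6, 10, 15, 21, 28, 36], sync_points == 3
```

Eight elements need only three synchronization points here, because no thread has to coordinate with another inside a step.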

Virtual instruction-level parallelism and low-level parallelism. Flow says multicore CPUs currently handle low-level parallelism sub-optimally. Multiple instructions can be executed in various functional units only if they are independent of one another. Pipeline hazards slow down instruction execution.

In the Flow PPU, functional units are organized as a chain, where each unit can use the results of its predecessors as operands. Dependent code execution is possible within a step of execution, and pipeline hazards are eliminated.
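The chaining idea can be pictured with a small functional model in which each unit receives its predecessor's result as an operand. This is only a conceptual sketch: `run_step`, the two-unit chain, and the choice of operations are hypothetical and say nothing about how the hardware is actually organized.

```python
from operator import add, mul


def run_step(chain, value):
    """Pass a value through a chain of functional units within one step.

    Each entry in chain is (operation, second_operand). The first operand
    of every unit is the result produced by the unit before it, so a
    sequence of dependent operations completes in a single pass.
    """
    result = value
    for operation, operand in chain:
        result = operation(result, operand)
    return result


# Dependent computation (x * 3) + 4 expressed as a two-unit chain.
chain = [(mul, 3), (add, 4)]
output = run_step(chain, 5)   # (5 * 3) + 4 == 19
```

In this model the add depends on the multiply, yet both complete in one pass through the chain instead of waiting on each other across separate issue slots.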

Flow says its technology will be backward compatible with all existing legacy software and applications. The PPU’s compiler automatically recognizes parallel parts of the code and executes them in PPU cores.

Also, Flow is developing an AI tool to help application and software developers identify parallel parts of the code and propose streamlining methods for maximum performance.

The team ran hundreds of simulations while at VTT to test and support its claims.

The company is working on a parallel processor compiler to optimize workflow assignments for application developers who want to achieve maximum performance. The start-up doesn’t have any fantasies about entering the semiconductor business and plans to be strictly an IP provider.