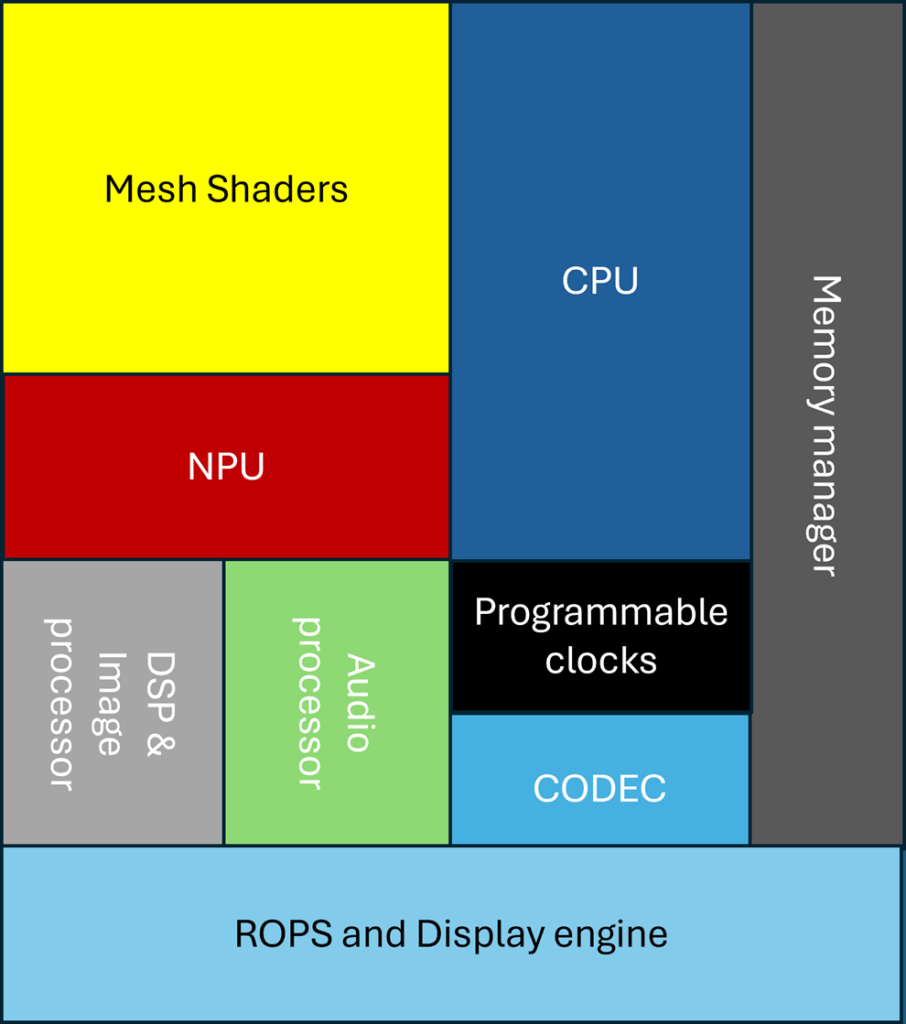

Nvidia’s first fully integrated GPU, introduced in 1999, featured transform and lighting processors. In 2003, Nvidia added a video codec engine and an audio processing unit. The G80 Tesla GPU followed in 2006 and included a programmable DSP and memory manager. The introduction of tensor cores in 2017 marked the arrival of the AI processor. Over the years, Nvidia, Qualcomm, Intel, and AMD have advanced their designs, integrating CPUs and GPUs into versatile SoCs and evolving GPUs into general-purpose computing platforms.

When Nvidia introduced the first fully integrated GPU, the GeForce 256, in October 1999 (25 years ago), it had a raster operations (ROP) engine and, most importantly, four transform and lighting (T&L) processors, the heart of a GPU.

In 2003, Nvidia added a hard-wired video codec engine and an integrated audio processing unit (APU) to its GPUs with the introduction of the NV30 (GeForce FX) generation; the technology appeared on AIBs such as the GeForce FX 5200 and, later, the GeForce 6200.

A few years later, in 2006, the company released the G80, its first Tesla-architecture GPU, with a DSP that gave it a programmable codec and image processor. The G80 also had the first programmable memory manager. A GPU now had five processors: a ROP engine, T&L processors, a memory manager, an APU, and a programmable DSP.

Qualcomm introduced its MSM7200 in 2008 with its Adreno 130 GPU (ATI Imageon Z430). Qualcomm had been incorporating a DSP in its SoCs since 2004.

In 2010, Intel introduced its Clarkdale processor, which put a GPU in the same package as the CPU.

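AMD quickly followed in 2011 with its APU (accelerated processing unit, not to be confused with the audio processing unit above), which combined a GPU (with a DSP, memory manager, and ROPs) and an x86 CPU on a single chip. That brought the processor count to six.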

Over the next few years, the number of SIMD processors (Nvidia started calling them CUDA cores) increased rapidly, following Moore’s law and advances in TSMC’s process technology.

Qualcomm introduced its Snapdragon 820 in 2015 with the Hexagon 680, an AI DSP with HVX (Hexagon Vector Extensions).

The big breakthrough came in 2017, when Nvidia introduced the Volta-based Tesla V100 with 640 tensor cores: the age of the AI processor had arrived. The V100 deep learning accelerator (DLA) was the precursor to the now-popular NPU, a programmable matrix-math processor. Nvidia now had six processors in a GPU.

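By 2018, the SIMDs had evolved from fixed-function units to application-specific processors (ASPs), then to general-purpose (GP) processors and, finally, to mesh shaders. With each generation, the GPU became more like a general-purpose computer, and API developers and applications struggled to keep up.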

In 2021, Nvidia caught up to AMD by introducing a GPU with an integrated CPU, though an Arm CPU rather than an x86.

Today, all the major suppliers offer a sea of processors, some called CPUs, some GPUs, and others SoCs. Yet they are fundamentally the same; what differs is how much design effort each supplier puts into each processor.